Before You Begin

In this 10-minute tutorial, you learn how to use Oracle Big Data Manager to ingest external data and back up that data to Oracle Object Storage Classic.

Background

Oracle Big Data Manager provides a browser-based GUI to simplify data transfer tasks. You can use the Data explorer feature in Oracle Big Data Manager to upload local files to HDFS, and transfer files between a variety of storage providers.

What Do You Need?

- Save the taxidropoff.csv dataset file on your local machine.

- Access to an instance of Oracle Big Data Cloud Service and the required login credentials.

- Access to Oracle Big Data Manager on the same Oracle Big Data Cloud Service instance and the required administration login credentials.

- A port must be opened to permit access to Oracle Big Data Manager, as described in Enabling Oracle Big Data Manager.

Access the Oracle Big Data Manager Console

- Sign in to Oracle Cloud and open your Oracle Big Data Cloud Service console.

- In the Oracle Big Data Cloud Service console, find the row for your instance (cluster) and click the Manage this service icon.

- In the Manage this service context menu, select Oracle Big Data Manager console to display the Oracle Big Data Manager Sign In page.

- Sign in using the required Oracle Big Data Manager administrative credentials to display the Oracle Big Data Manager Home page.

Register Object Storage Classic as a Storage Provider

- Using the Oracle Big Data Manager menu options, select Administration > Storage Providers. The available Storage Providers are displayed in a list, as shown below.

- Click Register new storage to launch the Register new storage wizard, as shown below.

- Enter oci-osc as the Name and Object Storage Classic as the Description. Then select Oracle Cloud Infrastructure Object Storage Classic as the Storage type and click Next.

- In the Storage Details step, enter the Storage URL and Tenant values for the selected storage provider. Then, enter the required username and password and click Test access to storage. If the test is successful, a preview of the storage content is displayed, as shown below. Then, click Next.

- In the Access step, select which users can see this storage in Big Data Manager. Shuttle the user or users from the available list on the left to the selected list on the right. In this example, we select the bigdatamgr user. Then, click Next.

- In the Confirmation step, the selected storage details are shown. Click Register to complete the process.

Notes: The hdfs storage provider, highlighted in the list above, is registered by default when you create your Big Data Cloud Service instance. In this case, the installer also registered hive as an additional storage provider. You can register a variety of other storage providers, including Amazon S3, GitHub, Oracle Database, and MySQL.
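To sanity-check the Storage Details values outside the wizard, the following Python sketch builds (but does not send) an authentication request in the OpenStack Swift v1.0 style. It is an illustration only, not a description of what Test access to storage does internally. The function name build_auth_request, the /auth/v1.0 path, the X-Storage-User and X-Storage-Pass headers, the tenant:username format, and the placeholder values are all assumptions that do not come from this tutorial, so verify them against the documentation for your service.

```python
from urllib.request import Request


def build_auth_request(storage_url, tenant, username, password):
    """Build, without sending, a Swift v1.0-style authentication request.

    Assumptions (not taken from the tutorial): the Storage URL is the
    service base URL, the auth path is /auth/v1.0, and the user header
    takes the form "<tenant>:<username>".
    """
    auth_url = storage_url.rstrip("/") + "/auth/v1.0"
    headers = {
        "X-Storage-User": f"{tenant}:{username}",
        "X-Storage-Pass": password,
    }
    return Request(auth_url, headers=headers, method="GET")


# Hypothetical placeholder values; replace them with your own.
request = build_auth_request(
    "https://storage.example.invalid", "Storage-mytenant", "bigdatamgr", "secret"
)
print(request.get_method(), request.full_url)
```

Sending the request (for example with urllib.request.urlopen) is left out on purpose, so the sketch can be reviewed without touching a live service.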

Ingest Data Using Big Data Manager

In this section, you upload external data to HDFS for processing using Big Data Manager.

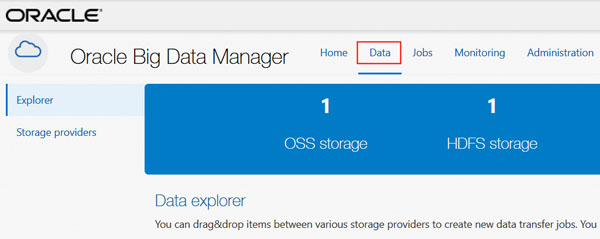

- On the Oracle Big Data Manager page, click the Data tab. By default, the Explorer page is displayed.

Description of the illustration data-tab.jpg - Navigate to the

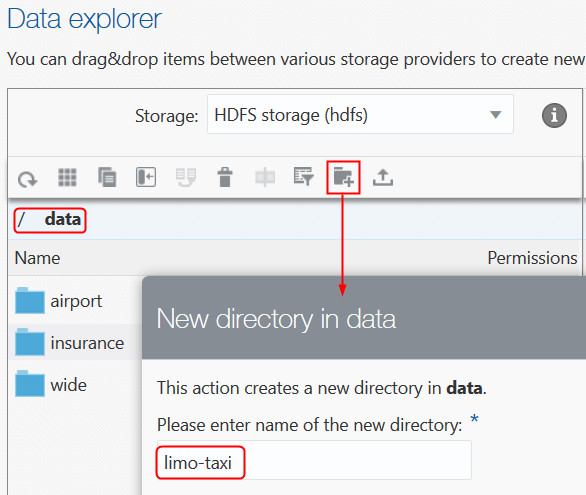

/datadirectory, click New Directory on the toolbar and create a directory named tax-limo as shown here.

on the toolbar and create a directory named tax-limo as shown here.

Description of the illustration create-new-dir.jpg - Navigate to the

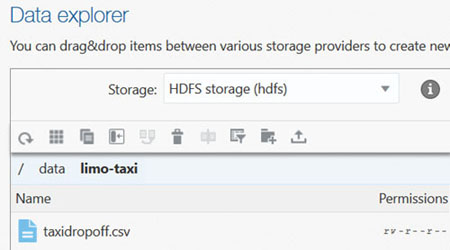

/data/limo-taxidirectory and click File Upload on the toolbar. Then use the Files Upload dialog to select the taxidropoff.csv data file that you saved locally and click Upload. When the file upload process is complete, close the Files upload dialog.

on the toolbar. Then use the Files Upload dialog to select the taxidropoff.csv data file that you saved locally and click Upload. When the file upload process is complete, close the Files upload dialog.

Note: HDFS storage (hdfs) is selected in the Storage drop-down list in the left portion of the Data explorer section.
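The directory creation and upload steps above have a rough command-line counterpart in the standard Hadoop file system shell. The Python sketch below only assembles the two commands (hdfs dfs -mkdir -p and hdfs dfs -put) so that you can review them before running them on a cluster node. The helper name hdfs_upload_commands and the example directory are assumptions for illustration and are not part of Big Data Manager.

```python
import shlex
from typing import List


def hdfs_upload_commands(local_file: str, hdfs_dir: str) -> List[List[str]]:
    """Return the argv lists that create hdfs_dir and copy local_file into it."""
    return [
        # -p creates missing parent directories and succeeds if the directory exists.
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],
        # -put copies a file from the local file system into HDFS.
        ["hdfs", "dfs", "-put", local_file, hdfs_dir],
    ]


# Example directory only; use the directory you created under /data.
for command in hdfs_upload_commands("taxidropoff.csv", "/data/tax-limo"):
    print(shlex.join(command))
```

Each list can be passed to subprocess.run(command, check=True) on a machine where the hdfs client is installed and configured.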

Result: The HDFS storage pane should look like this:

Notes:

The local file upload feature used in this tutorial supports data files of up to 1 GB only. You can ingest larger data volumes by using the Oracle Cloud Storage CLI or an HTTP endpoint. For practice on copying data from HTTP(S) servers using Oracle Big Data Manager, see the following tutorial:

Copying Data from an HTTP(S) Server with Oracle Big Data Manager
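Before choosing between the local upload and one of the other ingestion routes, it can help to check the file size against the 1 GB limit. The following Python sketch is one way to do that. The names UPLOAD_LIMIT_BYTES and fits_local_upload are hypothetical, and reading 1 GB as 2**30 bytes is an assumption, because the tutorial does not say which definition applies.

```python
import os

# Assumption: "1 GB" is interpreted as 2**30 bytes.
UPLOAD_LIMIT_BYTES = 1024 ** 3


def fits_local_upload(path, limit=UPLOAD_LIMIT_BYTES):
    """Return True if the file at path is no larger than limit bytes."""
    return os.path.getsize(path) <= limit


if os.path.exists("taxidropoff.csv"):
    if fits_local_upload("taxidropoff.csv"):
        print("File is within the local upload limit.")
    else:
        print("File is too large for local upload; use another ingestion route.")
```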

After you upload data, you can work on it in HDFS, or you can copy it to any other registered storage provider for further analysis. For practice on copying and analyzing data using Big Data Manager, see the following tutorial:

Back up Data Using Object Storage Classic

In this section, you back up the taxidropoff.csv data file to Oracle Object Storage Classic.

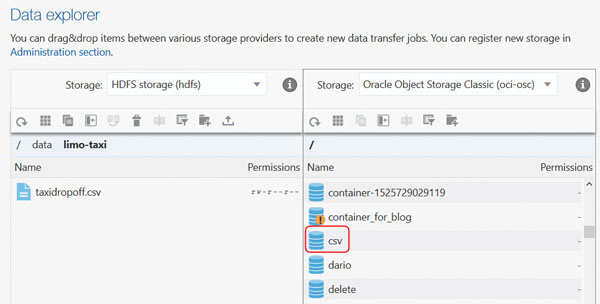

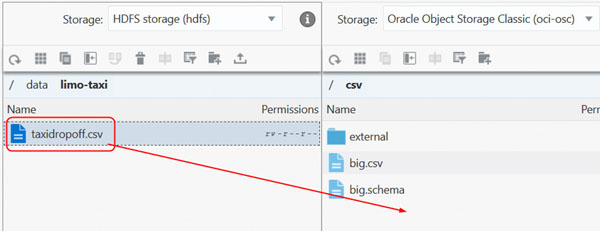

- In the Data explorer section of the Oracle Big Data Manager page, ensure that the HDFS storage (hdfs) pane is displayed on the left and the Oracle Object Storage Classic storage pane is displayed on the right.

- Scroll down in the Oracle Object Storage Classic pane and find the csv container, as shown below.

- Open the /csv container. Then drag the taxidropoff.csv file from the hdfs storage pane to the /csv container in the oci-osc storage pane, as shown below.

- The New copy data job dialog box opens, providing an overview of the copy job, as shown below. Click Create.

- A Data copy job dialog box opens, providing the status and progress of the copy job. When the copy job finishes, close the Data copy job dialog.

Result: A backup copy of taxidropoff.csv appears in the /csv container of Oracle Object Storage Classic.

Note: You can use the same technique to back up objects from other registered storage providers to Oracle Object Storage Classic.

- When you are finished with this session, close Oracle Big Data Manager and close your Oracle Big Data Cloud Service.
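If you want extra assurance that the backup copy matches the file you uploaded, one option is to compare checksums. The Python sketch below computes the MD5 digest of the local taxidropoff.csv file and compares it with an ETag value that you obtain separately for the stored object. It assumes that the object store reports the MD5 digest as the ETag for an object stored in a single piece, which is the usual behavior of OpenStack Swift-compatible stores but is not stated in this tutorial. The function names md5_hex and matches_etag are hypothetical.

```python
import hashlib


def md5_hex(path, chunk_size=1024 * 1024):
    """Return the hexadecimal MD5 digest of the file at path, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_etag(path, etag):
    """Compare a local file's MD5 digest with an ETag string.

    Surrounding double quotes and letter case in the ETag are ignored.
    """
    return md5_hex(path) == etag.strip().strip('"').lower()
```

A call such as matches_etag("taxidropoff.csv", etag_value) returns True only when the two digests agree. A mismatch does not always mean corruption, because an object stored in segments can carry an ETag that is not a plain MD5 digest of its content.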

Ingesting and Backing Up Data With Oracle Object Storage Classic
