How to Save Power on SPARC T5, M5, M6, T7, M7 and S7 Servers

by Julia Harper

Published July 2013 (updated August 2013, January 2014 and December 2016)

Save power on Oracle's SPARC T5, M5, M6, T7, M7 and S7 servers with power policies and power caps that enable hardware low-power states.

- Introduction

- Power Management Policies

- Power Capping

- Observing Power Consumption

- Use Cases and Scripts

- How Power Management Works

- See Also

- About the Author

Introduction

You can save power on SPARC systems with the click of a button!

This article presents techniques for observing and saving power through monitoring tools, power management policies, and power caps. It also explains the underlying hardware capabilities and software features that enable the power savings. Reducing server power consumption allows you to reduce your data center's infrastructure power consumption, which saves you money (and helps the environment).

Note: In addition to applying to SPARC T5, M5, and M6 servers, the power saving policies discussed in this article can also be applied to SPARC M7, T7, and S7 servers; any differences are highlighted inline.

Power Management Policies

You can reduce system power consumption by tens to hundreds of watts when you enable the right power management policy. For example, on a SPARC T5-2 server from Oracle with 255 gigabytes of memory, we measured a savings of 235 watts (27 percent) on an idle system after enabling the system's power savings features.
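The measured figures imply some arithmetic worth spelling out. The Python sketch below does two things:

- It derives the idle baseline implied by a 235-watt (27 percent) saving.
- It annualizes that saving, assuming for illustration only that the server sits idle around the clock for a whole year.

```python
# Back-of-the-envelope arithmetic for the SPARC T5-2 idle measurement above.
# Assumption (illustration only): the server idles 24 hours a day, 365 days a year.

SAVED_WATTS = 235        # measured saving, from the text
SAVED_FRACTION = 0.27    # 27 percent, from the text
HOURS_PER_YEAR = 24 * 365


def implied_baseline_watts(saved_watts: float, saved_fraction: float) -> float:
    """Idle power draw before power management was enabled, implied by the saving."""
    return saved_watts / saved_fraction


def annual_kwh(saved_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy saved over `hours` of idling, in kilowatt-hours."""
    return saved_watts * hours / 1000.0


if __name__ == "__main__":
    baseline = implied_baseline_watts(SAVED_WATTS, SAVED_FRACTION)
    print(f"implied idle baseline: about {baseline:.0f} W")
    print(f"energy saved per idle year: about {annual_kwh(SAVED_WATTS):.0f} kWh")
```

With these inputs the script reports an implied baseline of roughly 870 W and about 2,059 kWh saved per idle year. Because 27 percent is a rounded figure, treat the baseline as approximate.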

Power management policies can be set for the platform and for each logical domain running Oracle Solaris 11.

Platform Power Management Policies

There are three platform power management policies:

- Elastic policy: Hardware low-power states are used that trade performance for power savings. Use the elastic policy when:

  - The system will be idle for a while, such as overnight or on the weekend.

  - Work comes and goes on the system, and some latency in completing that work is tolerable for overall power savings.

  - The system runs flat out for long periods of time, with quiet times in between.

- Performance policy: Hardware low-power states are applied when resources are idle. Use the performance policy when:

  - The system runs a mix of workloads with periods of idleness.

  - The power savings from putting unallocated and idle resources into lower power states justify an occasional negligible loss of performance.

- Disabled policy: All hardware power states are set to full power. Use the disabled policy when:

  - Guaranteed full performance is critical, such as for financial market trading, end-of-month accounting, or system data backups.

  - The interaction between a particular workload and the performance policy results in less performance than you want.

The default platform power management policy is the performance policy.

Notes:

- For all three platform policies to be available on Oracle's SPARC T3 or SPARC T4 servers, you must run version 8.3 of the system firmware and version 3.0 of Oracle VM Server for SPARC.

- Under the performance policy on SPARC M7, T7, and S7 servers, hardware used by Oracle Solaris guests is not put into a low-power state by default. For information about how to override the default setting, see the “Oracle Solaris 11 Power Management Policies” section.

Managing Platform Power Policies

The platform policy is managed by the service processor (SP) under the Oracle Integrated Lights Out Manager (Oracle ILOM) /SP/powermgmt target. There are several ways you can set or change the platform policy: via the Oracle ILOM command line, the Oracle ILOM BUI, SNMP, or IPMI.

Oracle ILOM Command Line:

- Log in to the Oracle ILOM SP as a user with an administrative role.

- Use the following commands at the Oracle ILOM prompt (->) to view the current policy and to change it to elastic, performance, or disabled, respectively:

-> show /SP/powermgmt policy

-> set /SP/powermgmt policy=elastic

-> set /SP/powermgmt policy=performance

-> set /SP/powermgmt policy=disabled

Note: For a SPARC M5, M6, or M7 server, prepend /Servers/PDomains/PDomain_<#>/ to the target names, for example, /Servers/PDomains/PDomain_0/SP/powermgmt.
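When you script against several servers, the PDomain rule in the note above is easy to get wrong. The Python sketch below builds the ILOM policy commands shown above. It prepends /Servers/PDomains/PDomain_<#>/ only when a physical domain number is passed in.

The helper names and the `pdomain` parameter are hypothetical. The code only builds command strings and never contacts the SP.

```python
from typing import Optional

VALID_POLICIES = ("elastic", "performance", "disabled")


def ilom_target(target: str, pdomain: Optional[int] = None) -> str:
    """Return an ILOM target, prefixed for a physical domain on SPARC M5, M6, or M7."""
    if pdomain is None:
        return target
    return f"/Servers/PDomains/PDomain_{pdomain}{target}"


def show_policy_cmd(pdomain: Optional[int] = None) -> str:
    """Command that displays the current platform policy."""
    return f"show {ilom_target('/SP/powermgmt', pdomain)} policy"


def set_policy_cmd(policy: str, pdomain: Optional[int] = None) -> str:
    """Command that changes the platform policy, rejecting unknown policy names."""
    if policy not in VALID_POLICIES:
        raise ValueError(f"unknown platform policy: {policy!r}")
    return f"set {ilom_target('/SP/powermgmt', pdomain)} policy={policy}"


print(set_policy_cmd("elastic"))       # set /SP/powermgmt policy=elastic
print(set_policy_cmd("disabled", 0))   # set /Servers/PDomains/PDomain_0/SP/powermgmt policy=disabled
```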

Oracle ILOM BUI:

- Connect to the SP from a web browser: https://<SP-IP-address>

Note: For a SPARC M5, M6, or M7 server, from the upper left drop-down menu, choose the physical domain you wish to manage.

- Log in as superuser.

- Go to the Power Management -> Settings tab (shown in Figure 1).

- Select the power policy that you want to use.

- Save the policy setting.

Figure 1

SNMP:

- Log in to an SNMP-capable system with network access to the Oracle ILOM SP.

- Use the following commands to view the current policy and to change the policy to performance (3), elastic (4), and disabled (5), respectively:

# snmpget -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtPolicy.0

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtPolicy.0 = 3

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtPolicy.0 = 4

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtPolicy.0 = 5

Note: For a SPARC M5, M6, or M7 server, insert Domain into the target name after sunHwCtrl and append the domain ID + 1. For example, for physical domain 2, change sunHwCtrlPowerMgmtPolicy.0 to sunHwCtrlDomainPowerMgmtPolicy.3.

Note that SNMP uses the SNMP MIB called SUN-HW-CTRL-MIB.
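The note above is mechanical enough to script. The sketch below turns a SUN-HW-CTRL-MIB object name into one of two forms:

- the platform instance (.0), or
- the per-PDomain form (Domain inserted after sunHwCtrl, instance = domain ID + 1).

It then builds the snmpset argument list using the policy codes listed above. The helper names are hypothetical. The private community string is copied from the examples and may differ on your site.

```python
from typing import List, Optional

# Integer values for sunHwCtrlPowerMgmtPolicy, as listed in the text above.
POLICY_CODES = {"performance": 3, "elastic": 4, "disabled": 5}


def snmp_object(name: str, pdomain: Optional[int] = None) -> str:
    """Return the object instance for the whole platform or for one physical domain.

    Platform: append .0. Physical domain (SPARC M5, M6, M7): insert "Domain"
    after "sunHwCtrl" and use the domain ID + 1 as the instance.
    """
    prefix = "sunHwCtrl"
    if not name.startswith(prefix):
        raise ValueError(f"expected a {prefix}* object name, got {name!r}")
    if pdomain is None:
        return f"{name}.0"
    return f"{prefix}Domain{name[len(prefix):]}.{pdomain + 1}"


def snmpset_policy_args(host: str, policy: str,
                        pdomain: Optional[int] = None) -> List[str]:
    """Argument list equivalent to the snmpset examples above ("=" takes the type from the MIB)."""
    oid = snmp_object("sunHwCtrlPowerMgmtPolicy", pdomain)
    return ["snmpset", "-v2c", "-cprivate", host, oid, "=", str(POLICY_CODES[policy])]


print(snmp_object("sunHwCtrlPowerMgmtPolicy", 2))  # sunHwCtrlDomainPowerMgmtPolicy.3
```

The list can be passed unchanged to subprocess.run on a host that has the Net-SNMP tools and the MIB installed.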

IPMI:

Use at least version 1.8.9 of the Oracle ILOM CLI. Use version 1.8.10.3 of ipmitool.

- Log in to an IPMI-capable system with network access to the Oracle ILOM SP.

- Use the following commands to view the current policy and to change it to performance, elastic, or disabled, respectively:

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "show /SP/powermgmt"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt policy=performance"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt policy=elastic"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt policy=disabled"

Note: For a SPARC M5, M6, or M7 server, prepend /Servers/PDomains/PDomain_<#>/ to the target names, for example, /Servers/PDomains/PDomain_0/SP/powermgmt.
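When you drive ipmitool from a script rather than a shell, the ILOM command must still reach ipmitool as a single argument. The double quotes achieve this in the examples above.

Below is a minimal Python sketch, with a hypothetical helper name, that only builds the argument list. It uses the same flags as the examples.

```python
import shlex
from typing import List


def ipmitool_cli(host: str, ilom_command: str, user: str = "root") -> List[str]:
    """Wrap an ILOM CLI command in `ipmitool ... sunoem cli`, as in the examples above.

    The ILOM command stays one list element, so its spaces are not split apart.
    """
    return ["ipmitool", "-I", "lan", "-H", host, "-U", user,
            "sunoem", "cli", ilom_command]


args = ipmitool_cli("<SP-IP-address>", "set /SP/powermgmt policy=performance")
print(shlex.join(args))  # shell-quoted form, handy for logging
# To run it for real: subprocess.run(args, check=True)
```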

Oracle Solaris 11 Power Management Policies

Normally the platform power management policy controls the behavior of all logical domains in the system. But you can override the platform policy in a specific Oracle Solaris 11 instance by configuring an Oracle Solaris power management policy.

Oracle Solaris 11 uses a switch and a knob to describe the power management policy:

- The administrative-authority switch indicates whether the platform power management policy is applied.

- The time-to-full-capacity (TTFC) knob indicates how much performance loss can be tolerated. The value is the number of microseconds of latency that can be tolerated before resources must be performing at full speed or full capacity. Oracle Solaris uses this value to determine which power savings features to enable.

Oracle Solaris 11 power management is controlled by the System Management Facility (SMF) power service. The power service must be enabled (see svcadm(1M)) before you can configure the power management policy. This service is enabled by default, and it is managed via the poweradm command.

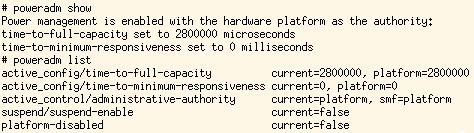

As superuser, you can use poweradm to get and set the policy. To see the current policy and the full set of poweradm properties, respectively, use the commands shown in Figure 2:

Figure 2

By default, administrative-authority is set to platform, which applies the platform power management policy. The platform policy is mapped to a TTFC value that enables only those power management features that meet the criteria described in "Platform Power Management Policies." When the platform policy is applied and the platform policy is set to disabled, the platform-disabled property will be set to true.

To apply the platform power management policy, use the following command:

# poweradm set administrative-authority=platform

You can override the platform policy by configuring your own TTFC value and setting administrative-authority to smf. To set a local policy that overrides the platform policy, run the following commands:

# poweradm set time-to-full-capacity=20000

# poweradm set time-to-minimum-responsiveness=0

# poweradm set administrative-authority=smf

The value you choose for time-to-full-capacity is in microseconds. The time-to-minimum-responsiveness value has no effect, but you must still set it before setting administrative-authority to smf.
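If you apply the override from your own tooling, the ordering constraint is the part worth encoding. The sketch below only builds the command strings shown above; the helper name is hypothetical. To apply each string, run it in a shell, or split it with str.split() and pass the list to subprocess.run.

```python
from typing import List


def smf_override_commands(ttfc_us: int) -> List[str]:
    """Return the poweradm commands for a local (smf) policy, in the required order.

    time-to-full-capacity is in microseconds. time-to-minimum-responsiveness has
    no effect on SPARC, but it must be set before administrative-authority=smf.
    """
    if ttfc_us < 0:
        raise ValueError("time-to-full-capacity must be non-negative")
    return [
        f"poweradm set time-to-full-capacity={ttfc_us}",
        "poweradm set time-to-minimum-responsiveness=0",
        # Switch the authority last, once both knobs are in place.
        "poweradm set administrative-authority=smf",
    ]


for cmd in smf_override_commands(20_000):  # 20,000 microseconds = 20 ms
    print(cmd)
```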

To disable power management entirely, set administrative-authority to none. This sets all components owned or shared by this Oracle Solaris instance to full power:

# poweradm set administrative-authority=none

The suspend-enable property is ignored on SPARC platforms.

For more information about poweradm, see the poweradm man page.

Power Aware Dispatcher

The only power management feature in Oracle Solaris 11.1 on SPARC processor–based systems is the Power Aware Dispatcher (PAD). PAD manages the power states of the CPUs and is available on systems based on the SPARC T5, M5, and M6 processors. For more information about PAD, see "How Power Management Works."

Enable PAD by setting administrative-authority to platform and setting the platform policy to performance or elastic. The Logical Domains Manager sets the TTFC value based on the platform policy. Depending on the platform and the Logical Domains Manager version, the performance policy TTFC may be smaller than the elastic policy TTFC. You can also enable PAD by setting administrative-authority to smf and setting time-to-full-capacity to a value greater than or equal to the value the platform sets for the performance or elastic policy.

A smaller TTFC might allow PAD to apply some, but not all, low-power states.
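The rules for enabling PAD can be summarized as a small decision function. This is a sketch, not Oracle code, and two of its inputs need explanation:

- The platform's TTFC for the performance or elastic policy varies with the platform and the Logical Domains Manager version. The caller must therefore supply it.
- "partial" stands for the case in which PAD might apply some, but not all, low-power states.

```python
from typing import Optional


def pad_status(authority: str, platform_policy: str,
               local_ttfc_us: Optional[int] = None,
               platform_ttfc_us: Optional[int] = None) -> str:
    """Classify PAD as "enabled", "partial", or "off", following the rules above.

    authority:        value of administrative-authority (platform, smf, or none).
    platform_policy:  elastic, performance, or disabled.
    local_ttfc_us:    time-to-full-capacity set locally (used with smf).
    platform_ttfc_us: TTFC the platform sets for performance or elastic (caller-supplied).
    """
    if authority == "platform":
        return "enabled" if platform_policy in ("performance", "elastic") else "off"
    if authority == "smf":
        if local_ttfc_us is None or platform_ttfc_us is None:
            raise ValueError("smf authority needs both TTFC values")
        # A smaller TTFC might still allow some, but not all, low-power states.
        return "enabled" if local_ttfc_us >= platform_ttfc_us else "partial"
    return "off"  # administrative-authority=none
```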

Note: PAD is not available prior to Oracle Solaris 11.1, and poweradm is not available on Oracle Solaris 10. In Oracle Solaris 10, power management is limited to device power management. For more information, see the "Oracle Solaris 10: Device Power Management" section of "Using Interfaces to Manage the Power Management Features" in "How to Use the Power Management Controls on SPARC Servers" on the Oracle Technology Network.

Power Capping

Power capping allows you to set an upper limit for the system power consumption, and it enforces that limit.

Soft power caps and hard power caps are supported. Soft power capping attempts to enforce a power cap regardless of whether it is achievable. Hard power capping guarantees a cap won't be exceeded, but it can be enabled only if the cap is achievable. Hard power capping is available only on systems based on the SPARC T5 processor.

Use power capping to curtail the total power consumption of the system, for example, to

- Control costs.

- Manage heat.

- Meet a power provider's request that consumption be curtailed due to high demand, such as during certain time periods in hot summer months.

Use hard power capping when

- The power limit you want to set is achievable.

- You want instantaneous power adjustments.

- You want to limit the power provisioned to the server.

Use soft power capping when

- The system doesn't support hard caps (as is true for systems based on the SPARC T3, T4, M5, and M6 processors).

- The power limit you want to set might be achievable only in some conditions (for example, with certain workloads or under certain ambient temperatures).
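The two lists above reduce to a simple rule of thumb, sketched below as a hypothetical helper. Choose hard capping only when the platform supports it and the limit is achievable under every condition you expect; otherwise choose soft capping.

The second helper maps that choice onto the pendingtimelimit values described in "Managing Power Caps": 0 for hard capping, default for soft capping.

```python
def choose_cap_mode(supports_hard_cap: bool, achievable_in_all_conditions: bool) -> str:
    """Pick "hard" or "soft" power capping using the criteria listed above.

    Hard caps need platform support (SPARC T5 in this article) and a limit that
    holds for all expected workloads and ambient temperatures.
    """
    if supports_hard_cap and achievable_in_all_conditions:
        return "hard"
    return "soft"


def pending_timelimit(mode: str) -> str:
    """Value for pendingtimelimit: 0 selects hard capping; default lets the SP tune a soft cap."""
    return "0" if mode == "hard" else "default"


print(pending_timelimit(choose_cap_mode(supports_hard_cap=False,
                                        achievable_in_all_conditions=True)))  # default
```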

Managing Power Caps

The power capping budget is managed by the SP under the Oracle ILOM /SP/powermgmt/budget target. There are several ways to set or change the budget: via the Oracle ILOM command line, Oracle ILOM BUI, SNMP, or IPMI.

Oracle ILOM Command Line

- Log in to the Oracle ILOM SP as a user with an administrative role.

- At the Oracle ILOM prompt (->), use the command shown in Listing 1 to view the current budget:

    -> show /SP/powermgmt/budget

     /SP/powermgmt/budget
        Targets:

        Properties:
            activation_state = disabled
            status = ok
            powerlimit = 1000 (watts)
            timelimit = 10
            violation_actions = none
            min_powerlimit = 532
            pendingpowerlimit = 1000 (watts)
            pendingtimelimit = 10
            pendingviolation_actions = none
            commitpending = (Cannot show property)

        Commands:
            cd
            set
            show

Listing 1

Note: For a SPARC M5, M6, or M7 server, prepend /Servers/PDomains/PDomain_<#>/ to the target names, for example, /Servers/PDomains/PDomain_0/SP/powermgmt/budget.

The read-only powerlimit property specifies the maximum power in watts the system is allowed to consume. Change this value by setting the pendingpowerlimit property to a new value.

-> set /SP/powermgmt/budget pendingpowerlimit=1200

The read-only timelimit property determines how many seconds the power can be over the limit before the limit is considered violated. Change the value of the timelimit property by setting the pendingtimelimit property to a new value. Set pendingtimelimit to zero (0) to tell the system to use the hard power capping policy to limit the power.

-> set /SP/powermgmt/budget pendingtimelimit=0

Set pendingtimelimit to a non-zero value to use the soft power capping policy. Setting it to default allows the system to choose a value that is tuned to give the system sufficient time to notice an exceeded power limit and adjust the power consumption under normal conditions.

-> set /SP/powermgmt/budget pendingtimelimit=default

The read-only violation_actions property can be either none or hardpoweroff. With hardpoweroff, the system is powered off if power consumption exceeds the powerlimit value for longer than the time specified by timelimit. By default, violation_actions is none. Change the value of the violation_actions property by setting the pendingviolation_actions property to a new value.

-> set /SP/powermgmt/budget pendingviolation_actions=none
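The interplay between powerlimit and timelimit can be modeled as follows. This is a simplified illustration, not the SP's actual algorithm, and it rests on two assumptions:

- Power is sampled at a fixed interval, once per second by default.
- The limit counts as violated once consumption has stayed above powerlimit for more than timelimit seconds without dropping back under it.

```python
from typing import Iterable, Optional


def first_violation(samples_w: Iterable[float], powerlimit_w: float,
                    timelimit_s: int, sample_period_s: int = 1) -> Optional[int]:
    """Return the time in seconds at which the budget counts as violated, or None.

    Assumption: one power sample every `sample_period_s` seconds. Any sample at or
    below the limit resets the over-limit timer.
    """
    over_since = None
    for i, watts in enumerate(samples_w):
        t = i * sample_period_s
        if watts > powerlimit_w:
            if over_since is None:
                over_since = t          # start of an over-limit stretch
            if t - over_since > timelimit_s:
                return t
        else:
            over_since = None           # back under the limit
    return None


print(first_violation([950] * 20, powerlimit_w=900, timelimit_s=10))  # 11
```

With violation_actions set to hardpoweroff, the returned time is where the system would be powered off in this model. With the default of none, no action is taken.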

Pending values do not take effect until the commitpending property is set to true.
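The pending/commit behavior described above can be pictured with a toy model. The Budget class below is hypothetical and uses the defaults from Listing 1. It leaves out activation_state, which is set separately.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

Value = Union[int, str]


@dataclass
class Budget:
    """Toy model of /SP/powermgmt/budget: pending values take effect only on commit."""
    # Active (read-only) values; defaults taken from Listing 1.
    active: Dict[str, Value] = field(default_factory=lambda: {
        "powerlimit": 1000, "timelimit": 10, "violation_actions": "none"})
    pending: Dict[str, Value] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # As in Listing 1, the pending values start out equal to the active ones.
        if not self.pending:
            self.pending = dict(self.active)

    def set_pending(self, name: str, value: Value) -> None:
        """Equivalent of `set /SP/powermgmt/budget pending<name>=<value>`."""
        if name not in self.active:
            raise KeyError(f"no such budget property: {name}")
        self.pending[name] = value

    def commit(self) -> None:
        """Equivalent of `set /SP/powermgmt/budget commitpending=true`."""
        self.active.update(self.pending)


budget = Budget()
budget.set_pending("powerlimit", 900)
budget.set_pending("timelimit", 0)     # 0 selects hard capping
print(budget.active["powerlimit"])     # 1000: nothing committed yet
budget.commit()
print(budget.active["powerlimit"])     # 900
```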

Example:

Issue the following five commands to display the power being consumed, set pendingpowerlimit to 900 watts, set pendingtimelimit to 0 to enable hard power capping, apply the pending values, and activate the budget, respectively:

-> show /SP/powermgmt actual_power

-> set /SP/powermgmt/budget pendingpowerlimit=900

-> set /SP/powermgmt/budget pendingtimelimit=0

-> set /SP/powermgmt/budget commitpending=true

-> set /SP/powermgmt/budget activation_state=enabled

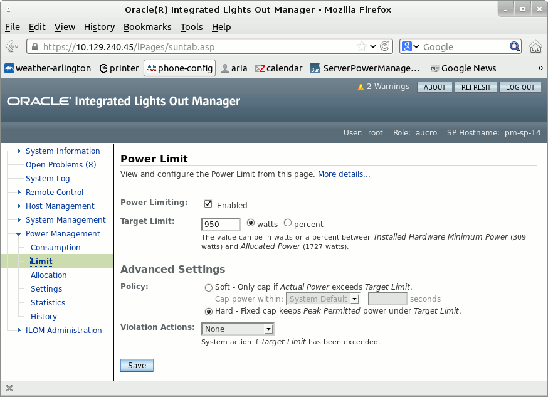

Oracle ILOM BUI

- Connect to the SP from a web browser: https://<SP-IP-address>

Note: For a SPARC M5, M6, or M7 server, from the upper left drop-down menu, choose the physical domain you wish to manage.

- Log in as superuser.

- Go to the Power Management -> Consumption tab to view the current power usage.

- Go to the Power Management -> Limit tab (shown in Figure 3).

- Select Power Limiting to enable power capping.

- Specify the power limit in the Target Limit field.

- Select the Soft or Hard option.

- Click Save.

Figure 3

SNMP

- Log in to an SNMP-capable system with network access to the Oracle ILOM SP.

- Use the following commands to view the power limit, set the pending power limit to 900 watts, set the pending time limit to 0 to enable hard power capping, apply the pending values, and activate the budget, respectively:

# snmpget -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtBudgetPowerlimit.0

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtBudgetPendingPowerlimit.0 = 900

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtBudgetPendingTimelimit.0 = 0

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtBudgetCommitPending.0 = true

# snmpset -v2c -cprivate <SP-IP-address> sunHwCtrlPowerMgmtBudget.0 = activated

Note: For a SPARC M5, M6, or M7 server, insert Domain into the target name after sunHwCtrl and append the domain ID + 1. For example, for physical domain 2, change sunHwCtrlPowerMgmtBudgetPowerlimit.0 to sunHwCtrlDomainPowerMgmtBudgetPowerlimit.3.

Note that SNMP uses the SNMP MIB called SUN-HW-CTRL-MIB.

IPMI

Use at least version 1.8.9 of the Oracle ILOM CLI. Use version 1.8.10.3 of ipmitool.

- Log in to an IPMI-capable system with network access to the Oracle ILOM SP.

- Use the following commands to view the current budget, set the pending power limit to 900 watts, set the pending time limit to 0 to enable hard power capping, apply the pending values, and activate the budget, respectively:

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "show /SP/powermgmt/budget"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt/budget pendingpowerlimit=900"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt/budget pendingtimelimit=0"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt/budget commitpending=true"

# ipmitool -I lan -H <SP-IP-address> -U root sunoem cli "set /SP/powermgmt/budget activation_state=enabled"

Note: For a SPARC M5, M6, or M7 server, prepend /Servers/PDomains/PDomain_<#>/ to the target names, for example, /Servers/PDomains/PDomain_0/SP/powermgmt/budget.

Observing Power Consumption

Several power management interfaces provide visibility into the power being consumed by the system. Via these interfaces it is possible to observe the following:

- The overall platform power consumption

- Platform power consumption broken down by CPUs, memory, and fans

- On SPARC M5 and SPARC M6 servers, the power consumption of each physical domain

- The power consumption of each logical domain

You can observe not only the current consumption, but also rolling averages over the last 15, 30, or 60 seconds, and a history of power consumption over the last hour or 14 days.
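Conceptually, a 15-, 30-, or 60-second rolling average is the mean of the most recent samples in that window. The sketch below assumes one power sample per second. The SP's actual sampling interval and averaging method are not specified in this article, and the RollingPower class is hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Iterable


class RollingPower:
    """Keep 15-, 30-, and 60-second rolling averages of 1-second power samples."""

    def __init__(self, windows_s: Iterable[int] = (15, 30, 60)) -> None:
        self.windows = tuple(windows_s)
        # Only the longest window's worth of samples needs to be retained.
        self.samples: Deque[float] = deque(maxlen=max(self.windows))

    def add(self, watts: float) -> None:
        self.samples.append(watts)

    def averages(self) -> Dict[int, float]:
        """Mean over each window; windows not yet full use the samples seen so far."""
        recent = list(self.samples)
        result = {}
        for w in self.windows:
            window = recent[-w:]
            result[w] = sum(window) / len(window) if window else 0.0
        return result
```

For example, 45 one-second samples at 200 W followed by 15 at 300 W give averages of 300 W, 250 W, and 225 W for the 15-, 30-, and 60-second windows.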

Observing Platform Power Consumption

The SP monitors the power consumption of the system. You can observe the power consumption via the Oracle ILOM command line, Oracle ILOM BUI, SNMP, or IPMI. The Oracle ILOM BUI also presents graphs of power consumption.

Oracle ILOM Command Line

- Log in to the Oracle ILOM SP as a user with an administrative role.

- At the Oracle ILOM prompt (->), use the command shown in Listing 2 to view the current power consumption:

    -> show /SP/powermgmt

     /SP/powermgmt
        Targets:
            budget
            powerconf

        Properties:
            actual_power = 272
            permitted_power = 1045
            allocated_power = 1045
            available_power = 1200
            threshold1 = 0
            threshold2 = 0
            policy = performance

        Commands:
            cd
            set
            show

Listing 2

Note: For a SPARC M5, M6, or M7 server, prepend /Servers/PDomains/PDomain_<#>/ to the target names, for example, /Servers/PDomains/PDomain_0/SP/powermgmt.

The properties in Listing 2 mean the following:

- available_power is the maximum power in watts that the power supplies in this system are capable of supplying.
- allocated_power is the maximum power in watts that the physical resources in this system are capable of consuming.
- permitted_power is the maximum allowed power in watts. By default, this value is the same as the allocated_power value. If an achievable hard power cap is set, permitted_power is reduced to the powerlimit value set in the budget.
- actual_power is the current power consumption in watts.

- Use the following commands at the Oracle ILOM prompt (->) to view the power consumption history for the system, CPUs, memory, and fans, respectively:

-> show /SYS/VPS/history

-> show /SYS/VPS_CPUS/history

-> show /SYS/VPS_MEMORY/history

-> show /SYS/VPS_FANS/history

Note: For a SPARC M5, M6, or M7 server, to see the power consumption history for a single domain, use /Servers/PDomains/PDomain_<#>/HOST in place of /SYS in the target names, for example, /Servers/PDomains/PDomain_0/HOST/VPS.
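The relationship among the four properties can be checked with a few lines of arithmetic. The sketch below encodes the permitted_power rule described above as a hypothetical helper and applies it to the values in Listing 2.

```python
from typing import Optional


def expected_permitted_power(allocated_w: int, hard_cap_w: Optional[int] = None,
                             cap_achievable: bool = False) -> int:
    """permitted_power equals allocated_power unless an achievable hard cap is set."""
    if hard_cap_w is not None and cap_achievable:
        return hard_cap_w
    return allocated_w


# Values from Listing 2 (no hard cap active, so permitted == allocated).
actual, permitted, allocated, available = 272, 1045, 1045, 1200

print(permitted == expected_permitted_power(allocated))            # True
print(f"drawing {actual} W, {actual / permitted:.0%} of permitted power")
print(f"{available - allocated} W of supply capacity beyond the worst-case allocation")
```

For Listing 2 this prints True, then "drawing 272 W, 26% of permitted power", then "155 W of supply capacity beyond the worst-case allocation".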

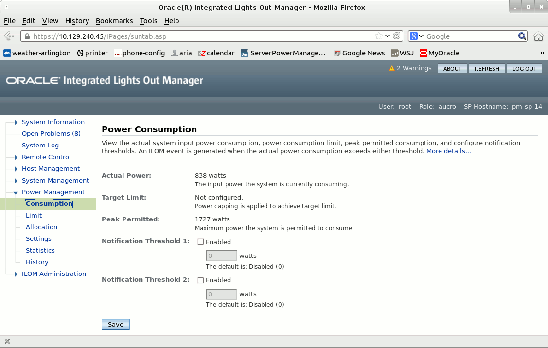

Oracle ILOM BUI

Via the Oracle ILOM BUI you can see the current power consumption, as shown in Figure 4.

Figure 4

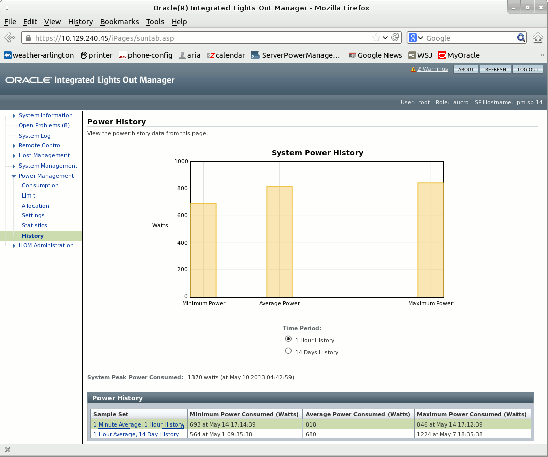

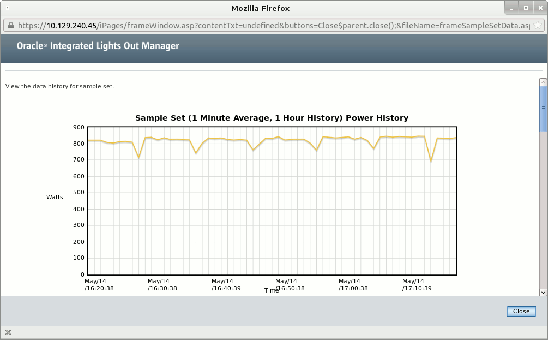

You can also see a history of the power consumption for the last hour or the last 14 days, as shown in Figure 5.

Figure 5

The graph in Figure 6 shows the last hour's worth of 1-minute power consumption averages:

Figure 6

SNMP

- Log in to an SNMP-capable system with network access to the Oracle ILOM SP.

- Use the following commands to view the current system power consumption, available power, and permitted power, respectively:

# snmpwalk -v2c -cpublic <SP-IP-address> SUN-HW-CTRL-MIB::sunHwCtrlPowerMgmtActualPower

# snmpwalk -v2c -cpublic <SP-IP-address> SUN-HW-CTRL-MIB::sunHwCtrlPowerMgmtAvailablePower

# snmpwalk -v2c -cpublic <SP-IP-address> SUN-HW-CTRL-MIB::sunHwCtrlPowerMgmtPermittedPower

Note: For a SPARC M5, M6, or M7 server, insert Domain into the target name after sunHwCtrl and append the domain ID + 1. For example, for physical domain 2, change sunHwCtrlPowerMgmtActualPower.0 to sunHwCtrlDomainPowerMgmtActualPower.3.

Note that SNMP uses the SNMP MIB called SUN-HW-CTRL-MIB.

IPMI

- Log in to an IPMI-capable system with network access to the Oracle ILOM SP.

- Use the following command to view the current power consumption of the system, CPUs, memory, and fans:

# ipmitool -I lan -H <SP-IP-address> -U root sdr | grep VPS
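For scripted monitoring, the sdr output can be parsed. The sketch below makes three assumptions:

- ipmitool prints its usual three pipe-separated columns: sensor name, reading, and status.
- read_vps runs the same command as above, but without the grep, and authenticates as root like the example.
- Both function names are hypothetical.

```python
import subprocess
from typing import Dict, Iterable


def parse_vps(lines: Iterable[str]) -> Dict[str, str]:
    """Map VPS* sensor names to their readings from `ipmitool sdr` output.

    Assumes each sensor line has three pipe-separated columns:
    name | reading | status. Other lines are ignored.
    """
    readings = {}
    for line in lines:
        cols = [c.strip() for c in line.split("|")]
        if len(cols) == 3 and cols[0].startswith("VPS"):
            readings[cols[0]] = cols[1]
    return readings


def read_vps(host: str, user: str = "root") -> Dict[str, str]:
    """Run `ipmitool -I lan -H <host> -U <user> sdr` and keep only the VPS sensors."""
    out = subprocess.run(
        ["ipmitool", "-I", "lan", "-H", host, "-U", user, "sdr"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_vps(out.splitlines())
```

For example, parse_vps(["VPS | 272 Watts | ok"]) returns {"VPS": "272 Watts"}. The 272-watt reading is borrowed from Listing 2 for illustration.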

Observing Logical Domain Power Consumption

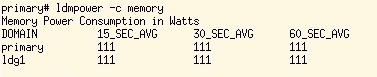

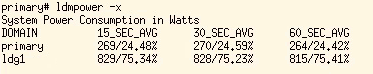

The ldmpower command is available in the control (primary) logical domain, where the Oracle VM Server for SPARC Logical Domains Manager runs. This command allows you to see the processor, memory, and system power consumption of each logical domain. You can also see the total system, processor, memory, and fan power consumption with this command.

You must be superuser or your login must be configured with a rights profile allowing you to run the ldmpower command.

With the ldmpower command, you can see the per-domain processor or memory power consumption and an estimate of the per-domain system power consumption based on an extrapolation of the processor and memory power consumption. You have the option of seeing 15-, 30-, and 60-second rolling averages or fixed-interval averages for the past hour or 14 days. Below are just a few examples of what is observable with ldmpower.

Figure 7 shows the rolling average per-domain processor power consumption for two domains:

Figure 7

Figure 8 shows the rolling average per-domain memory power consumption for two domains:

Figure 8

Figure 9 shows the rolling average per-domain system power consumption for two domains:

Figure 9

Figure 10 shows the total, processor, memory, and fan consumption for the system overall:

Figure 10

For more information about ldmpower, see the ldmpower man page.

Note: In order to use ldmpower on a SPARC T3 or T4 server, you must run version 8.3 of the system firmware and version 3.0.0.2 of Oracle VM Server for SPARC.

Use Cases and Scripts

This section shows how to automate the application of Oracle Solaris power management policies and how to monitor the frequency of CPUs.

Additional use cases covering how to automate the application of platform power management policies and power capping are covered in "Use Cases and Scripts" in the "How to Use Power Management Controls on SPARC Servers" article on the Oracle Technology Network.

Disabling Power Management in an Oracle Solaris Guest Domain During Trading Hours

You can use cron to disable power management in the Oracle Solaris 11 instance that runs trading operations from 10:00 to 16:00 each weekday, while allowing the platform power management policy to be applied to all other Oracle Solaris instances and to unallocated resources.

In the Oracle Solaris guest in question, simply add these lines to the root crontab:

# Disable power management at 10:00 M-F

0 10 * * 1,2,3,4,5 poweradm set administrative-authority=none >> /pm/poweradm.log 2>&1

# Enable the platform configured power management policy at 16:00 M-F

0 16 * * 1,2,3,4,5 poweradm set administrative-authority=platform >> /pm/poweradm.log 2>&1
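To check which setting applies at a given moment, the two crontab entries can be modeled as below. The sketch assumes the guest starts out with administrative-authority=platform and that nothing else changes the property. authority_at is a hypothetical helper, not part of poweradm.

```python
from datetime import datetime


def authority_at(when: datetime) -> str:
    """administrative-authority in effect under the two crontab entries above.

    The 10:00 job sets "none" and the 16:00 job restores "platform". Both run
    Monday through Friday only (cron day-of-week 1-5, which isoweekday() matches).
    """
    trading_day = when.isoweekday() <= 5      # Monday=1 ... Friday=5
    in_window = 10 <= when.hour < 16
    return "none" if trading_day and in_window else "platform"


print(authority_at(datetime(2013, 6, 20, 12, 0)))  # a Thursday at noon: none
```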

Observing CPU Speed Changes

You can accurately monitor the power states being applied to your Oracle Solaris instance's CPUs by comparing the tick registers of the CPUs in each core against the wall-clock time. The tick register increments at the current effective clock rate, which is reduced to a slower rate when power management is applied (as described in "How Power Management Works").

The clockrate.py Python script, which was written by an Oracle performance engineer, monitors the effective speed of the CPUs by comparing the tick rate against both the wall-clock time and an initial per-CPU clock rate collected via the following kstat command:

kstat -p cpu_info:::current_clock_Hz
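The core calculation behind this technique is simple:

- Effective MHz is the number of tick-register increments divided by the elapsed wall-clock microseconds.
- The percentage compares that figure against the rated clock rate reported by kstat.

The sketch below shows only that arithmetic, with hypothetical function names. It is not the clockrate.py source, and reading the tick register itself is outside its scope.

```python
def effective_mhz(tick_start: int, tick_end: int,
                  wall_start_s: float, wall_end_s: float) -> float:
    """Effective clock rate in MHz from two tick-register readings and wall-clock times."""
    elapsed_s = wall_end_s - wall_start_s
    if elapsed_s <= 0:
        raise ValueError("wall-clock interval must be positive")
    return (tick_end - tick_start) / elapsed_s / 1e6


def percent_of_rated(measured_mhz: float, current_clock_hz: int) -> int:
    """Compare against cpu_info:::current_clock_Hz, like the Mhz(%) column."""
    return round(100 * measured_mhz / (current_clock_hz / 1e6))


# A 3.6 GHz CPU whose tick register advanced by 2.621e9 in one second.
mhz = effective_mhz(0, 2_621_000_000, 0.0, 1.0)
print(round(mhz), f"{percent_of_rated(mhz, 3_600_000_000)}%")   # 2621 73%
```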

In the example shown in Listing 3, clockrate.py monitors one CPU from each even-numbered core. You can see the frequency of the cores drop when the elastic policy is applied.

clockrate.py --cpu=0,16,32,48,64,80,96,112,128,144,160,176,192,208,224,240

2013-06-20 08:17:19 PSET CHIP CORE CPU Mhz(r) Mhz(m) Mhz(%) t=100

2013-06-20 08:17:19 -1 0 3074 0 3600 3592 100% <-- disabled policy applied

2013-06-20 08:17:19 -1 0 3082 16 3600 3597 100%

2013-06-20 08:17:19 -1 0 3090 32 3600 3597 100%

2013-06-20 08:17:19 -1 0 3098 48 3600 3597 100%

2013-06-20 08:17:19 -1 0 3106 64 3600 3597 100%

2013-06-20 08:17:19 -1 0 3114 80 3600 3597 100%

2013-06-20 08:17:19 -1 0 3122 96 3600 3597 100%

2013-06-20 08:17:19 -1 0 3130 112 3600 3596 100%

2013-06-20 08:17:19 -1 1 3138 128 3600 3596 100%

2013-06-20 08:17:19 -1 1 3146 144 3600 3597 100%

2013-06-20 08:17:19 -1 1 3154 160 3600 3597 100%

2013-06-20 08:17:19 -1 1 3162 176 3600 3597 100%

2013-06-20 08:17:19 -1 1 3170 192 3600 3597 100%

2013-06-20 08:17:20 -1 1 3178 208 3600 3597 100%

2013-06-20 08:17:20 -1 1 3186 224 3600 3597 100%

2013-06-20 08:17:20 -1 1 3194 240 3600 3597 100%

2013-06-20 08:17:19 PSET CHIP CORE CPU Mhz(r) Mhz(m) Mhz(%) t=100

2013-06-20 08:17:20 -1 0 3074 0 3600 3596 100%

2013-06-20 08:17:20 -1 0 3082 16 3600 3597 100%

2013-06-20 08:17:20 -1 0 3090 32 3600 3597 100%

2013-06-20 08:17:20 -1 0 3098 48 3600 3597 100%

2013-06-20 08:17:20 -1 0 3106 64 3600 3597 100%

2013-06-20 08:17:20 -1 0 3114 80 3600 3597 100%

2013-06-20 08:17:20 -1 0 3122 96 3600 3597 100%

2013-06-20 08:17:20 -1 0 3130 112 3600 3596 100%

2013-06-20 08:17:20 -1 1 3138 128 3600 3596 100%

2013-06-20 08:17:20 -1 1 3146 144 3600 3597 100%

2013-06-20 08:17:20 -1 1 3154 160 3600 3597 100%

2013-06-20 08:17:20 -1 1 3162 176 3600 3597 100%

2013-06-20 08:17:20 -1 1 3170 192 3600 3597 100%

2013-06-20 08:17:21 -1 1 3178 208 3600 2621 73% <-- elastic policy applied

2013-06-20 08:17:21 -1 1 3186 224 3600 229 6%

2013-06-20 08:17:21 -1 1 3194 240 3600 3212 89%

2013-06-20 08:17:19 PSET CHIP CORE CPU Mhz(r) Mhz(m) Mhz(%) t=100

2013-06-20 08:17:21 -1 0 3074 0 3600 455 13%

2013-06-20 08:17:21 -1 0 3082 16 3600 3597 100%

2013-06-20 08:17:21 -1 0 3090 32 3600 3597 100%

2013-06-20 08:17:21 -1 0 3098 48 3600 454 13%

2013-06-20 08:17:21 -1 0 3106 64 3600 3597 100%

2013-06-20 08:17:21 -1 0 3114 80 3600 454 13%

2013-06-20 08:17:21 -1 0 3122 96 3600 454 13%

2013-06-20 08:17:21 -1 0 3130 112 3600 3597 100%

2013-06-20 08:17:21 -1 1 3138 128 3600 114 3%

2013-06-20 08:17:21 -1 1 3146 144 3600 117 3%

2013-06-20 08:17:21 -1 1 3154 160 3600 2081 58%

2013-06-20 08:17:21 -1 1 3162 176 3600 118 3%

2013-06-20 08:17:22 -1 1 3170 192 3600 2076 58%

2013-06-20 08:17:22 -1 1 3178 208 3600 115 3%

2013-06-20 08:17:22 -1 1 3186 224 3600 121 3%

2013-06-20 08:17:22 -1 1 3194 240 3600 933 26%

2013-06-20 08:17:19 PSET CHIP CORE CPU Mhz(r) Mhz(m) Mhz(%) t=100

2013-06-20 08:17:22 -1 0 3074 0 3600 123 3%

2013-06-20 08:17:22 -1 0 3082 16 3600 114 3%

2013-06-20 08:17:22 -1 0 3090 32 3600 931 26%

2013-06-20 08:17:22 -1 0 3098 48 3600 122 3%

2013-06-20 08:17:22 -1 0 3106 64 3600 934 26%

2013-06-20 08:17:22 -1 0 3114 80 3600 114 3%

2013-06-20 08:17:22 -1 0 3122 96 3600 2036 57%

2013-06-20 08:17:22 -1 0 3130 112 3600 1288 36%

2013-06-20 08:17:22 -1 1 3138 128 3600 228 6%

2013-06-20 08:17:22 -1 1 3146 144 3600 114 3%

2013-06-20 08:17:22 -1 1 3154 160 3600 931 26%

2013-06-20 08:17:23 -1 1 3162 176 3600 123 3%

2013-06-20 08:17:23 -1 1 3170 192 3600 934 26%

2013-06-20 08:17:23 -1 1 3178 208 3600 114 3%

2013-06-20 08:17:23 -1 1 3186 224 3600 120 3%

2013-06-20 08:17:23 -1 1 3194 240 3600 934 26%

Listing 3

How Power Management Works

This section describes the hardware power savings capabilities of the SPARC T5, M5, M6, M7, and S7 servers and how they are controlled by software.

Hardware Power States

SPARC T5 processors contain 16 cores, SPARC M5 processors contain 6 cores, and SPARC M6 processors contain 12 cores. Each core contains eight strands (hardware execution units). Each strand is presented to Oracle Solaris as a CPU. Communication between processors is provided by coherency links. Processors and coherency links can both be put into lower-power states, as described below.

SPARC M7 processors contain eight SPARC cache clusters, each containing four cores. As in previous SPARC processors, each core has eight strands. Dynamic Voltage and Frequency Scaling (DVFS) and clock cycle skip can be applied independently to each cache cluster. SPARC S7 processors have only two cache clusters.

- Processor DVFS. DVFS is a mechanism through which the processor's clock frequency is adjusted, and the voltage applied to the processor is adjusted to the level needed at that frequency. This is a particularly effective form of power savings because voltage adjustments have a larger impact on power consumption than mere frequency changes. Software uses DVFS for power savings. A field-programmable gate array (FPGA) on the CPU board also uses DVFS to throttle a processor when it is in an over-current or over-temperature condition.

- Core (Core-Pair) Clock Cycle Skip. Clock Cycle Skip is a mechanism through which instruction execution is suppressed during some of a core's clock cycles. This reduces the effective clock frequency, which reduces the processor power consumption. Each SPARC M5 core can be configured to skip up to seven of every eight clock cycles. On SPARC T5 and SPARC M6 processors, each consecutive pair of cores can be configured to skip up to seven of every eight clock cycles. (For simplicity, the remaining text will refer only to cores, not to core pairs.)

- Coherency Link Scaling (SPARC T5-2 servers only). On SPARC T5-2 systems, there are four coherency links between the two processors. Coherency traffic flows across these links to strands, I/O, caches, and memory on the other processor. It is possible to power-off two of the four links and reroute all traffic onto the remaining two links.
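A standard first-order model explains why DVFS is so effective. Dynamic CMOS power is roughly proportional to C * V^2 * f, so lowering the voltage along with the frequency saves more than lowering the frequency alone. Cycle skip, by contrast, lowers only the effective frequency: skipping k of every 8 cycles leaves (8 - k)/8 of the clock.

The numbers below are illustrative only, not SPARC measurements. The 10 percent voltage reduction in particular is an assumption.

```python
def relative_dynamic_power(voltage_scale: float, freq_scale: float) -> float:
    """Dynamic power relative to nominal under the first-order model P ~ C * V^2 * f."""
    return voltage_scale ** 2 * freq_scale


def cycle_skip_mhz(base_mhz: float, skipped_of_8: int) -> float:
    """Effective frequency when a core skips `skipped_of_8` of every 8 clock cycles."""
    if not 0 <= skipped_of_8 <= 7:
        raise ValueError("a core can skip 0 to 7 of every 8 cycles")
    return base_mhz * (8 - skipped_of_8) / 8


# Frequency alone cut by 20 percent: power falls to 80 percent of nominal.
print(relative_dynamic_power(1.0, 0.8))              # 0.8
# Same cut plus an (assumed) 10 percent voltage reduction: about 65 percent.
print(round(relative_dynamic_power(0.9, 0.8), 3))    # 0.648
# Maximum cycle skip on a 3,600 MHz core leaves 450 MHz of effective clock.
print(cycle_skip_mhz(3600, 7))                       # 450.0
```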

Power Management Software

Oracle Solaris 11.1 guest domains directly calculate the desired power states for CPU resources (cores and processors) they share.

Note: A SPARC T3 or T4 server must run version 8.2 of the system firmware and version 2.2 of Oracle VM Server for SPARC to enable this capability in Oracle Solaris 11.1.

The Logical Domains Manager calculates appropriate power states for unused CPU resources and for resources used by Oracle Solaris 10 guest domains. The Logical Domains Manager and each Oracle Solaris 11.1 guest domain request that the hypervisor apply their desired states.

The hypervisor is a privileged entity and is the only one with direct control over power states. The hypervisor arbitrates among requests on shared resources, and applies the highest performing power state among the requested states.

Figure 11
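The arbitration rule described above amounts to taking the maximum over the requests for a shared resource. The sketch makes two hypothetical choices:

- Power states are encoded as the percentage of full effective clock, so a larger number means higher performance. The hypervisor's real encoding is not described in this article.
- The requester names are made up.

```python
from typing import Dict


def arbitrate(requests: Dict[str, int]) -> int:
    """Apply the highest-performing state requested for one shared resource.

    `requests` maps each requester (the Logical Domains Manager or an Oracle
    Solaris 11.1 guest) to the state it wants, encoded here (hypothetically) as
    a percentage of full effective clock. Raises ValueError if there are no requests.
    """
    return max(requests.values())


core_requests = {"ldm": 12, "guest-a": 50, "guest-b": 25}
print(arbitrate(core_requests))  # 50: the most demanding request wins
```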

Platform Policy Software

The Logical Domains Manager polls Oracle ILOM for the platform policy and sends the platform policy to guest domains running Oracle Solaris 11. The Logical Domains Manager also applies the platform power management policy to resources that are not bound to any logical domain and on behalf of Oracle Solaris 10 guest domains (which have no support for SPARC power management).

Here is how the Logical Domains Manager makes use of the hardware power features to manage power.

- Elastic and performance policy applied to unused CPU resources. When the elastic or performance policy is enabled, the Logical Domains Manager applies the lowest Clock Cycle Skip level to any core with no strands allocated to a guest domain. It applies the lowest DVFS level to any processor with no strands allocated to a guest domain.

- Elastic policy applied to in-use CPU resources. When the elastic policy is enabled, the Logical Domains Manager uses a combination of the processor DVFS and Core Clock Cycle Skip features to keep the CPU utilization of each Oracle Solaris 10 guest within a target utilization range. The Logical Domains Manager selects a DVFS frequency that is sufficient to address the utilization needs of all Oracle Solaris 10 guest domains sharing the processor. The Logical Domains Manager chooses a cycle skip ratio that is sufficient to address the utilization needs of all Oracle Solaris 10 guest domains sharing the core. The Logical Domains Manager sends all calculated DVFS and cycle skip states to the hypervisor, which arbitrates among requests from multiple sources.

- Elastic policy applied to coherency links (SPARC T5-2 server only). When the elastic policy is enabled, the Logical Domains Manager notifies the hypervisor that the number of coherency links in use between the two processors can be reduced from four to two links when the coherency traffic between the two processors is light. The hypervisor uses hardware counters to determine the load and adjusts the number of links as the load changes.
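The "sufficient for all sharers" selection used by the elastic policy can be sketched as follows. This is a hypothetical Python model, not Logical Domains Manager code: it assumes each Oracle Solaris 10 guest's load is expressed as the fraction of full-speed capacity it needs, that the available DVFS (or cycle-skip) settings are expressed as effective speeds relative to full speed, and that only the upper bound of the target utilization range matters. The level list and target value are made-up numbers, not real SPARC settings.

```python
from typing import Iterable, Sequence


def lowest_sufficient_level(levels: Sequence[float],
                            demands: Iterable[float],
                            target_util: float) -> float:
    """Pick the slowest speed that keeps every sharer under target_util.

    levels      -- available effective speeds as fractions of full speed
                   (hypothetical values; 1.0 means full speed)
    demands     -- each guest's load as a fraction of full-speed capacity
    target_util -- upper bound of the target utilization range (0 < x <= 1)
    """
    # At speed s, a guest whose full-speed load is d shows utilization d / s.
    # Keeping d / s <= target_util requires s >= d / target_util.
    needed = max((d / target_util for d in demands), default=0.0)
    for speed in sorted(levels):
        if speed >= needed:
            return speed
    # No level is fast enough: fall back to the fastest available level.
    return max(levels)


# Hypothetical DVFS levels for one processor shared by three guests.
dvfs_levels = [0.5, 0.625, 0.75, 0.875, 1.0]
guest_demands = [0.10, 0.35, 0.20]
print(lowest_sufficient_level(dvfs_levels, guest_demands, target_util=0.6))
# prints 0.625
```

The same function applies at core granularity to cycle-skip ratios: pass the demands of the guests sharing a core and the core's available effective speeds. With no demands at all, it returns the slowest level, which matches the treatment of unused resources. Either way, the result is only a request that the hypervisor still arbitrates against requests from other sources.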

Oracle Solaris 11.1 Policy Software

Enabling power management on Oracle Solaris 11.1 with a sufficiently large TTFC value (as discussed in "Oracle Solaris 11 Power Management Policies") enables PAD. PAD is an enhancement to the Oracle Solaris scheduler that uses CPU power states in two ways:

- P-states: When the scheduler determines that only transient threads are queued to a core's CPUs, it asks the hypervisor to put the core into the lowest effective clock frequency allowed by the TTFC value. The arrival of additional threads onto the queue for the core's CPUs causes the scheduler to return the core to full power.

- C-states: When a CPU has no work, it enters halt (C1 C-state). When the last CPU in a core prepares to halt due to having no work, the scheduler predicts, based on past behavior, whether the core will remain halted for a while. If so, it asks the hypervisor to put the core into the lowest effective clock frequency allowed by the TTFC value. This is the C2 C-state. An interrupt returns the core to full power (unless P-states have also requested low power).
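The two PAD decisions can be summarized as a per-core decision function. This is a hypothetical Python model, not Oracle Solaris scheduler code: `CoreView`, its fields, and `CoreRequest` are invented for illustration, and the real logic (including how PAD predicts idle periods and maps the TTFC value to a frequency) is more involved.

```python
from dataclasses import dataclass
from enum import Enum


class CoreRequest(Enum):
    FULL_POWER = "no low-power request"
    LOW_P_STATE = "lowest P-state allowed by TTFC"
    C2 = "C2: halted at lowest frequency allowed by TTFC"


@dataclass
class CoreView:
    """Hypothetical snapshot of one core as the scheduler might see it."""
    queued_threads: int       # threads queued to the core's CPUs
    transient_threads: int    # how many of those are transient (short-lived)
    halted_cpus: int          # CPUs in C1 (halted with no work)
    total_cpus: int           # CPUs (strands) in the core
    predict_long_idle: bool   # prediction based on past idle behavior


def pad_request(core: CoreView) -> CoreRequest:
    """Return the power state a PAD-like scheduler would request for a core."""
    # C-state path: every CPU in the core is halting and the core is
    # expected to stay idle for a while, so request C2.
    if core.halted_cpus == core.total_cpus and core.predict_long_idle:
        return CoreRequest.C2
    # P-state path: work is queued, but all of it is transient.
    if core.queued_threads > 0 and core.queued_threads == core.transient_threads:
        return CoreRequest.LOW_P_STATE
    # Anything else: keep (or return) the core at full power.
    return CoreRequest.FULL_POWER


light_load = CoreView(queued_threads=2, transient_threads=2,
                      halted_cpus=6, total_cpus=8, predict_long_idle=False)
print(pad_request(light_load).name)  # prints LOW_P_STATE
```

As with any other requester, the returned state is what PAD asks for; the hypervisor may apply a different one.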

When power management is disabled on Oracle Solaris 11.1, Oracle Solaris notifies the Logical Domains Manager, which then prevents low-power states on shared components. (Coherency links on SPARC T5-2 servers are the only shared components with power states.)

The powertop Tool

powertop is an Oracle Solaris command that provides visibility into the behavior of PAD. powertop shows the percentage of time PAD has requested the hypervisor to apply each P-state and C-state. Figure 12 shows an example of the output from powertop on an idle system:

Figure 12

This example shows that PAD is requesting the slowest P-state (rather than the C2 C-state) most of the time based on the level of idleness observed on this system.

Note that the actual hardware state might differ from what PAD requests. It might be higher if the resource is shared by a guest requiring higher performance. It might be lower if a power cap has been applied or if the processor is throttled by the CPU board's FPGA. To observe the actual hardware state, monitor the CPU tick registers as described in "Observing CPU Speed Changes."

Power Capping Software

- Soft Power Capping. The Logical Domains Manager uses processor DVFS to achieve a power cap. When soft power capping is enabled, the SP monitors the system power consumption and notifies the Logical Domains Manager when it must reduce power or is allowed to increase power. Based on this notification, the Logical Domains Manager either increases or decreases the maximum DVFS level allowed for all processors in the system and notifies the hypervisor of this maximum. When power capping selects a maximum DVFS frequency, the hypervisor prevents the Logical Domains Manager or Oracle Solaris from setting higher frequencies, regardless of the power management policy.

- Hard Power Capping. An FPGA on each CPU board is connected to a "back door" interface on the processors that allows it to throttle the DVFS frequency on those processors. When hard power capping is enabled, Oracle ILOM configures the FPGA with the power limit. The FPGA monitors the system power consumption, and throttles the DVFS frequency when the power limit is exceeded. When the power falls below the limit, the FPGA removes the throttling. Hard power capping enforces only power limits that can be achieved in all circumstances.
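The soft power capping feedback loop can be simulated to show how the pieces interact. This is a minimal Python sketch under stated assumptions: the "reduce"/"increase" notifications, the 20-watt headroom, the one-step adjustment, and the power-per-level table are all hypothetical, and hard power capping (which happens in the FPGA) is not modeled.

```python
from typing import Optional

# Hypothetical system power (watts) at each DVFS level, slowest to fastest.
POWER_AT_LEVEL = [600, 650, 700, 760, 820]
TOP_LEVEL = len(POWER_AT_LEVEL) - 1


def sp_notification(measured_watts: float, cap_watts: float,
                    headroom_watts: float = 20.0) -> Optional[str]:
    """SP side (hypothetical): compare measured power with the cap."""
    if measured_watts > cap_watts:
        return "reduce"    # over the cap: power must come down
    if measured_watts < cap_watts - headroom_watts:
        return "increase"  # comfortably under the cap: power may go up
    return None            # close to the cap: leave things alone


def adjust_max_level(max_level: int, note: Optional[str]) -> int:
    """Logical Domains Manager side: move the system-wide DVFS ceiling one step."""
    if note == "reduce":
        return max(0, max_level - 1)
    if note == "increase":
        return min(TOP_LEVEL, max_level + 1)
    return max_level


def hypervisor_apply(requested_level: int, max_level: int) -> int:
    """Hypervisor side: no policy request may exceed the capped maximum."""
    return min(requested_level, max_level)


cap_watts = 720.0
max_level = TOP_LEVEL
requested = TOP_LEVEL  # a busy workload asks for full speed

for _ in range(5):  # five SP monitoring intervals
    applied = hypervisor_apply(requested, max_level)
    watts = POWER_AT_LEVEL[applied]
    max_level = adjust_max_level(max_level, sp_notification(watts, cap_watts))

print(applied, watts)  # prints 2 700
```

The ceiling steps down from the fastest level until measured power falls under the 720-watt cap, then holds. The headroom band keeps the loop from oscillating between 700 and 760 watts.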

Example: SPARC T5-2 Server with Two Oracle Solaris 11 Instances

We measured a SPARC T5-2 system with 255 gigabytes of memory, running two instances of Oracle Solaris 11. Figure 13 compares the power consumption under the disabled, performance, and elastic policies.

Figure 13

Figure 13 shows that when this system is idle and the disabled policy is in effect, the system consumes about 860 watts of power. With the elastic policy, the system consumes about 625 watts, saving 27 percent of the power consumed with the disabled policy.
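The 27 percent figure follows directly from the two approximate idle readings in Figure 13; as a quick check:

```python
disabled_watts = 860  # idle system, disabled policy (approximate, Figure 13)
elastic_watts = 625   # idle system, elastic policy (approximate, Figure 13)

saved_watts = disabled_watts - elastic_watts
saved_percent = 100 * saved_watts / disabled_watts
print(f"{saved_watts} W saved ({saved_percent:.0f} percent)")
# prints 235 W saved (27 percent)
```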

The graph also shows that when a workload runs while the elastic policy is enabled, the system quickly adjusts the power states of the hardware components to provide full performance. The elastic policy saves no power while the workload runs, because the workload uses the full performance of the hardware.

See Also

- Watch a demonstration of the power management features on a SPARC T5-2 system.

- For more information about managing power on SPARC M5 physical domains, see the white paper "SPARC M5-32 Server Architecture."

- For more information about how to use SNMP and IPMI to manage Oracle ILOM, see "Appendix A: Accessing and Configuring the Interfaces" in the "How to Use the Power Management Controls on SPARC Servers" article on the Oracle Technology Network and see the Oracle ILOM Protocol Management Reference for SNMP and IPMI.

- For more information about the Oracle ILOM budget interface, see "Power Budget as of Oracle ILOM 3.0.6 for Server SPs" in the Oracle Integrated Lights Out Manager (ILOM) 3.0 HTML Documentation Collection.

- For more information about the Oracle ILOM power usage interfaces, see the "System Power Consumption Metrics" section of the Oracle Integrated Lights Out Manager (ILOM) 3.0 HTML Documentation Collection.

- For details about the hardware features of Oracle's SPARC T3 and SPARC T4 servers, see "How Power Management Works" in the "How to Use the Power Management Controls on SPARC Servers" article on the Oracle Technology Network.

About the Author

Julia Harper has been at Sun and Oracle for almost 20 years, with experience ranging from implementing drivers for low latency interconnects to designing integrated OS and service processor fault management solutions. For the past 6 years, she has led the effort to provide power management capabilities in the Oracle ILOM SP firmware and SPARC server software, and she is currently the architect and technical lead for the SPARC power management software group.

Revision 1.1, 08/06/2013:

Revision 1.2, 08/20/2013:

Revision 1.3, 01/03/2014:

Revision 1.4, 12/07/2016:
