DECEMBER 2018

Your Monthly PaaS Updates

Oracle PaaS Partner Community Newsletter

Table of Contents

Announcements & Community Section

- Presentations Oracle OpenWorld 2018

- Technology and Industry Innovations and Demos

- Cloud trials & community update – PaaS Partner Community Webcast December 18th 2018

- PaaS Partner YouTube Update December 2018

- Oracle Developer Meetups in Utrecht, Lille, Brussels, London, Lisbon, Madrid, Cologne and Oslo

- PaaS free trial accounts ICS, SOA CS, API CS, PCS, IoT and PaaS for SaaS

Integration Section

- Free eBook Cloud Integration & API Management for dummies

- When the Cracks Begin to Show On Designing Microservices

- API Platform Cloud Service Training, Samples and Demos

- Managing HTTP Headers with Oracle API Platform

- Provisioning Oracle API Platform Gateway Nodes using Terraform and Ansible on AWS

- Oracle Integration Cloud: Recommend Feature Demo

- Opportunity to Order Workflow: Integrating Salesforce with NetSuite - Part 2

- OIC Connectivity Agent Installation Pointers

- OIC --> VB CS --> Service Connections. Triggering an Integration from VB CS

- First steps with Oracle Self Service Integration Cloud

- Difference between File and FTP adapter in Oracle Integration Cloud

- The Power of High Availability Connectivity Agent

- Using ANT to investigate JCA adapters

- How to query your JMS over AQ Queues

- Oracle 18c Certification for Fusion Middleware 12c Release 2

Business Process Management Section

- Make Orchestration Better with RPA

Architecture & User Experience & Innovation Section

- Our new product - Katana 18.1 (Machine Learning for Business Automation)

- Developing an IoT Application Powered with Analytics

- Oracle Tech Talk: Blockchain: Beyond the Hype with a Developer

- Machine Learning — Getting Data Into Right Shape

Additional new material PaaS Community

- Top tweets PaaS Partner Community – December 2018

- Training Calendar PaaS Partner Community

- My private Corner Merry Christmas

Announcements & Community Section |

Hubs have helped dozens of businesses realize their vision by bringing them to life. Below are some of the catalogued applications and projects the Hubs have helped put into production for companies just like yours. Let us work with you to get your next project off the ground and into the cloud.

Attend our December edition of the PaaS Partner Community Webcast live on December 18th 2018 16:30 CET.

The PaaS Partner Community is your single point of access to PaaS resources. Information includes enablement, hands-on workshops and training material, sales kits including presentations in PPT format, and marketing campaign material. For free registration please visit www.oracle.com/goto/emea/soa

Schedule:

Tuesday December 18th 16:30 - 17:30 CET

Watch live here.

Missed our PaaS Partner Community Webcast? – watch the on-demand versions:

For the latest information please visit Community Updates Wiki page (Community membership required).

Want to learn more about developing Enterprise-grade Cloud Native applications on the Oracle Cloud Platform, covering topics like Microservices Architecture, developing in Node, Python and PHP, using Low Code development tools to build Mobile apps, and much more? Join the Oracle Developer Meetup groups if you want to follow Oracle’s solutions in this area, or participate in the events and hands-on labs we organize:

Please let us know in case you want to run an event at one of these locations or want to start your own local meetup. We look forward to supporting you and sponsoring the event with pizza and beer!

No one has achieved success with microservices just by talking about them. Unfortunately, many organizations spend a lot of time on exactly that, debating how to approach microservices. It is as though there were one perfect approach to designing and working with microservices that simply needs to be uncovered. In actual fact, there is no such definitive solution; even if there were, it would hold true only until changes in the organization, business objectives, technology frameworks and regulations made adjustments necessary.

It is tempting—just as it was a decade ago with SOA Web Services—to spend a lot of time and energy on identifying microservices. Creating an exhaustive overview of all microservices, defining the exact scope and interface of each, is not feasible and is not a smart investment of time. It would be a lot of work, and that work would never be complete. The definition of microservices is not an end in itself and giving in to this temptation represents a serious risk. Microservices are an instrument for achieving sustained business agility in a changing world of functional and non-functional requirements and evolving technical, political, economic, and legal parameters. Microservices cannot be defined once and for all, and they should not have to be. As architects and developers we are agile and flexible. We embrace change in all aspects of our IT organizations.

Here’s another organizational risk familiar from the SOA era: starting with an exclusive focus on the technology for implementing microservices and on the microservices platform, the underlying platform for eventually running the microservices (that do not even exist yet and for which no requirements are yet known). It is all too easy to spend time on this seemingly useful exercise and, after months of investigation and selection and architecting, to end up with an impractical, oversized and over-engineered platform – and no running microservices. Such discussions slow down the process of microservices adoption, obstruct the view of the essential challenges, and set up an organization for disappointing results (if not outright frustration).

A third category of risk is to just start building microservices without a clear business need or objective for a microservices architecture or, even worse, without really understanding what a microservices architecture entails from an organizational perspective. The keyword being overlooked: DevOps.

This article provides some insights and guidelines that can help propel teams of architects beyond discussions and into action. Perhaps it can also help establish some architecture guidelines, such as the importance of domain design.

What do we want to achieve with microservices?

When discussing microservices, we must remember what our objectives are. Microservices are not the objective; they are merely the means. Microservices are meant to help us with those objectives and if they do not do so, we neither need nor want them. Read the complete article here.

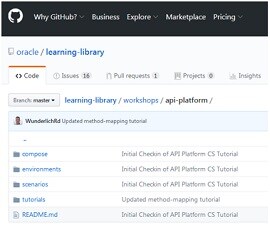

This is the place to learn all about API Platform! Here you will find our repository of tutorials, aimed at helping you on your path to becoming an expert.

What is a tutorial?

A tutorial is a small lesson that walks you through the process of performing a particular task, for example, Designing an API.

Note: Whenever there is a link, open it in a new tab (right-click -> "Open Link in New Tab"). This way you will keep your place in this lab guide without having to re-orient yourself after completing a task from a linked tutorial.

Think of a tutorial as the how: how to perform a task.

What is a scenario?

A scenario is the story or use case that provides a path through the tutorials. Think of a scenario as the what and the why.

Suggested approach

The overall structure of this training can be thought of as:

- Scenario

- Tutorials

- Screencasts (coming soon)

As you begin with the scenario, you may visit the linked tutorials for guidance, but we suggest you push yourself to use the application with only as much guidance as you need. Don't blindly follow the steps of a tutorial. In fact, some tasks naturally get repeated, such as deploying an API as you make changes. The first time you deploy, you may need to use the tutorial, but the second and subsequent times, try to do it without the tutorial so you can test yourself and confirm that you understand what this training is teaching you.

We plan to record each tutorial in a short screencast so that if you need to see it being done, or you simply want to validate that you've followed the task correctly, you can use these videos. Again, don't hesitate to push yourself by attempting to complete the task, then use the tutorial to validate your understanding.

Getting Started

- Choose the environment you will use

- You can visit Environments to learn about getting a free trial of Oracle Cloud.

- Visit the scenarios and choose which one you want to use. There is only one right now, so the choice is easy!

Get the free training material here.

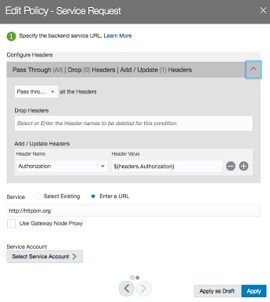

Imagine you have a service that is secured with Basic Auth, or better yet OAuth2, and you would like to leverage the complete capabilities of an API Platform, so you choose to create an API and deploy it to a gateway running in front of your service.

When you call your service directly from within your internal network, everything works, provided you include the appropriate Authorization header that your service expects. However, when you call the API endpoint on your gateway, the endpoint you will make available to your consumers, it does not work. You are passing everything exactly as in your original call; you've just changed the endpoint to point to your gateway load balancer address and API endpoint. What happened?

Well, most of the headers you pass to the gateway simply pass through by default, with the exception of Authorization. Here is an example of a really simple API I created.

This API receives a request to the "echo" endpoint and simply passes it to httpbin.org so we can see the result. As you can see, I am not including any security policies in my API; in this case I am choosing to leave that to the back-end service. What happens if I call this API with an Authorization header? The header is not included in the headers that httpbin.org echoes back.

This is because the gateway is an authorization and policy enforcement engine and in most cases, when we validate the user at the gateway, we do not want to pass that header to the back-end systems. But what if we do want to pass that header? It turns out this is quite simple. We add the header to our Service Request policy. Read the complete article here.
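To reproduce this test yourself, you can call the gateway endpoint with an Authorization header and check whether httpbin.org echoes it back. The Python sketch below uses only the standard library. The gateway URL and credentials are placeholders, and it assumes the "echo" endpoint returns httpbin's usual JSON body with a "headers" object; none of these details come from the article.

```python
import base64
import json
import urllib.request

# Hypothetical gateway endpoint: your gateway load balancer address plus the API endpoint.
GATEWAY_ECHO_URL = "https://gateway.example.com/echo"


def build_echo_request(url: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries a Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def authorization_forwarded(echo_body: str) -> bool:
    """Return True if the JSON echoed by httpbin.org lists an Authorization header."""
    echoed_headers = json.loads(echo_body).get("headers", {})
    return any(name.lower() == "authorization" for name in echoed_headers)


def check_gateway(url: str, user: str, password: str) -> bool:
    """Send the request through the gateway and report whether the header reached the back end."""
    request = build_echo_request(url, user, password)
    with urllib.request.urlopen(request, timeout=10) as response:
        return authorization_forwarded(response.read().decode("utf-8"))
```

Following the article, check_gateway(GATEWAY_ECHO_URL, ...) would be expected to return False until the Authorization header is added to the Service Request policy, and True afterwards.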

When using Oracle Autonomous API Platform, an API gets deployed to a logical gateway. The logical gateway consists of one or more nodes which are instances of the runtime, installed on physical machines, virtual machines, or cloud infrastructure. The gateway nodes handle the processing of the API requests, but a load balancer is still required to distribute traffic between the nodes. When performance becomes an issue, more nodes can be added to increase throughput. Providing an automated way to manage nodes ensures consistency of configurations and the ability to easily add and remove nodes.

The gateway nodes can be installed on-premise or in the cloud, and are not restricted to the Oracle cloud. This allows customers who already host their microservices on AWS to use Oracle's API Platform to monitor and expose their APIs from a central location. The API Platform portal provides a central location to deploy, activate, deprecate, and secure APIs while giving complete visibility of usage and KPI monitoring.

In this blog, I'll describe how I've created an automated way to provision, configure, and register new API gateway nodes, running on Amazon EC2 into the API Platform using Terraform and Ansible.
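One common way to hand the EC2 instances created by Terraform over to Ansible is to turn the JSON printed by terraform output -json into an Ansible inventory. The Python sketch below assumes a Terraform output named gateway_node_ips (a list of IP addresses), an inventory group called api_gateway_nodes and an SSH user of ec2-user. All three are hypothetical choices, not taken from the article, which may connect the two tools differently.

```python
import json

# Hypothetical names: adjust them to match your own Terraform outputs and Ansible playbooks.
OUTPUT_NAME = "gateway_node_ips"
INVENTORY_GROUP = "api_gateway_nodes"


def node_ips_from_terraform(output_json: str, output_name: str = OUTPUT_NAME) -> list[str]:
    """Extract the list of node IPs from the text printed by `terraform output -json`."""
    outputs = json.loads(output_json)
    # terraform output -json wraps every output as {"sensitive": ..., "type": ..., "value": ...}
    return list(outputs[output_name]["value"])


def render_inventory(ips: list[str], group: str = INVENTORY_GROUP, ssh_user: str = "ec2-user") -> str:
    """Render an INI-style Ansible inventory with one host line per gateway node.

    ssh_user defaults to ec2-user, an assumption that fits Amazon Linux AMIs.
    """
    lines = [f"[{group}]"]
    lines += [f"{ip} ansible_user={ssh_user}" for ip in ips]
    return "\n".join(lines) + "\n"
```

After terraform apply, you could write the rendered inventory to a file and pass it to ansible-playbook -i, so that every newly provisioned node gets installed and registered with the same playbook.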

Technologies used (and links for more help): APICS: This is the Oracle API Platform Cloud Service. Read the complete article here.

In our previous post, we demonstrated how Oracle Integration Cloud can automate your 'Opportunity to Order' workflow to help you achieve synchronization between your Salesforce and NetSuite applications. In this post, I will show you how easy it is to set up this integration flow between Salesforce and NetSuite.

The setup begins with the creation of individual connections for Salesforce and NetSuite using Oracle Integration Cloud's designer console. First, add the authentication credentials to access your application environments. Once the connections are saved and tested, we proceed to the next steps: creating an integration process flow through drag and drop, configuring the adapters, and defining the mapping. Finally, a single click activates your integration to achieve bi-directional synchronization between Salesforce and NetSuite.

Watch this video created by the Oracle Learning Library Team (YouTube channel) for a complete demo of the steps involved in using Integration Cloud Service to set up and configure your 'Opportunity to Order' workflow between Salesforce and NetSuite. Read the complete article here.

The connectivity agent has been a feature of Oracle’s integration cloud strategy from the beginning to address the challenge of the cloud/on-premise integration pattern. However, the implementation of the agent differs between Oracle Integration Cloud Service (ICS) and Oracle Integration Cloud (OIC). With both offerings, the pattern for setup remains the same:

- Create Agent Group in ICS/OIC Console

- Download the connectivity agent installer

- “Install” the agent on an on-premise machine using the Agent Group ID from step 1 (this registers the agent with ICS/OIC)

- Verify that the agent is communicating with ICS/OIC via the integration console

However, step 3 differs dramatically between ICS and OIC. With ICS, the installation process resulted in a WebLogic Server (WLS) single-server configuration (i.e., an all-in-one WLS server). Although the setup for the ICS agent has been optimized for an easy installation experience, the end result is fairly heavyweight. On OIC, by contrast, the agent is simply a jar file that is started using java -jar connectivityagent.jar. Behavior-wise the end result is the same, but the footprint and the setup/configuration experience are radically different. The rest of this blog focuses on what happens when the OIC agent is “installed”, and on details that may not be obvious from the online documentation and that can result in some “why does this not work” head scratching.

OIC Connectivity Agent High-Level Installation Steps

- Create an Agent Group in the OIC Console

- Download the Connectivity Agent zip file from the OIC Console

- Unzip the contents of the zip file on the on-premise agent machine

- Update the InstallerProfile.cfg with the details of the OIC environment and on-premise network

- Run the agent using java -jar connectivityagent.jar
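Steps 4 and 5 lend themselves to a small wrapper that checks InstallerProfile.cfg before starting the agent, which can catch an empty setting before it turns into head scratching. This Python sketch assumes a simple key=value file format and the property names oic_URL and agent_GROUP_IDENTIFIER. Treat those names as assumptions and verify them against the InstallerProfile.cfg shipped in your own download.

```python
import subprocess
from pathlib import Path

# Assumed property names; check them against the InstallerProfile.cfg in your agent zip.
REQUIRED_KEYS = ("oic_URL", "agent_GROUP_IDENTIFIER")


def read_profile(text: str) -> dict[str, str]:
    """Parse key=value lines, ignoring blank lines and '#' comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return required keys that are absent or left empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


def start_agent(agent_dir: Path) -> subprocess.Popen:
    """Validate the profile, then launch the agent with java -jar connectivityagent.jar."""
    profile = read_profile((agent_dir / "InstallerProfile.cfg").read_text())
    problems = missing_keys(profile)
    if problems:
        raise ValueError(f"InstallerProfile.cfg is missing values for: {', '.join(problems)}")
    # Start from the unzipped agent directory so the jar and the profile sit side by side.
    return subprocess.Popen(["java", "-jar", "connectivityagent.jar"], cwd=agent_dir)
```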

Please refer to the OIC Connectivity Agent on-line documentation for the details associated with the steps mentioned above:

https://docs.oracle.com/en/cloud/paas/integration-cloud/integrations-user/managing-agent-groups-and-connectivity-agent.html

OIC Connectivity Agent Installation Experience

Once the zip file is downloaded from the OIC console and unzipped on the agent machine, you will see something like the following directory structure (as of 18.4.3): Read the complete article here.

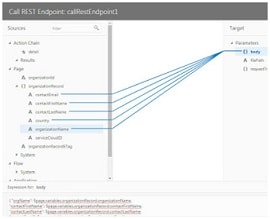

When you want to expose business objects from an external source in your visual application, you can add and manage connections to sources in the Service Connections pane of the Artifact Browser.

Ok, so this allows us to bring external functionality into VB CS.

The previous post showed my Organization Business Object.

I also have an Integration that creates Organizations in Service Cloud.

This Integration is exposed via REST. Read the complete article here.
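Outside VB CS, a quick way to see what the Service Connection will send is to build the same REST call yourself. The Python sketch below only constructs the request. The host, the integration path (modelled as an assumption on the /ic/api/integration/v1/flows/rest/ pattern used for REST-triggered OIC integrations) and the Organization payload field are hypothetical placeholders. Copy the real endpoint URL and request schema from your integration's metadata.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint and payload: take the real values from your integration's endpoint metadata.
INTEGRATION_URL = (
    "https://oic.example.com/ic/api/integration/v1/flows/rest/CREATE_ORGANIZATION/1.0/organizations"
)


def build_create_organization_request(
    url: str, name: str, user: str, password: str
) -> urllib.request.Request:
    """Build a POST request that sends an Organization as JSON to the REST-exposed integration."""
    body = json.dumps({"name": name}).encode("utf-8")  # hypothetical payload shape
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )
```

Sending it is then a matter of urllib.request.urlopen(request); inside VB CS, the Service Connection makes the equivalent call for you.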

An important part of enabling optimal use of SaaS applications is integrating various functions across those applications. Events in one application need to have an effect in others. These range from simple practical matters such as “send an email when a specific type of file is uploaded into a certain Dropbox or OneDrive folder” or “update a Google Document when a JIRA issue is created” to more profound actions such as “when a new lead is added to Oracle Sales Cloud, post a new message in a Slack channel” or “when an Eloqua account is added, create the same account in Oracle Sales Cloud.”

Oracle Self Service Integration Cloud (SSI) provides a framework for periodically polling a wide range of business applications out of the box, as well as any application you add yourself (as long as the application can be polled through calls to a REST API). Any records retrieved in a polling action can be used to trigger actions in other applications. SSI can perform some logic (filter, loop, conditional execution as well as some calculation and conversion) and create a request message to send to a target application. Many recipes are available out of the box, and more can easily be created for all known business applications as well as for those we add ourselves.
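The polling model described above (poll a REST source, filter the retrieved records, then build a request message for the target application) can be illustrated in a few lines of Python. This is a conceptual sketch, not SSI's implementation or API. The Lead record shape, the high-water-mark approach to detecting new records and the message format are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Iterable


@dataclass
class Lead:
    # Hypothetical record shape returned by a polled REST API.
    lead_id: str
    name: str
    created: datetime


def poll_once(
    fetch: Callable[[], Iterable[Lead]],
    last_seen: datetime,
    keep: Callable[[Lead], bool],
    to_message: Callable[[Lead], dict],
) -> tuple[list[dict], datetime]:
    """Run one polling cycle: fetch records, keep new ones that pass the filter, map them to messages."""
    new_records = [lead for lead in fetch() if lead.created > last_seen]
    messages = [to_message(lead) for lead in new_records if keep(lead)]
    # Advance the high-water mark so the next poll only sees newer records.
    newest = max((lead.created for lead in new_records), default=last_seen)
    return messages, newest
```

For a "new lead, post to Slack" style recipe, fetch would call the source application's REST API, and each returned message would become the request body sent to the target application.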

Note that SSI will be the foundation for a new Custom Adapter development kit for Oracle Integration Cloud; apparently it will support a low-code, graphical experience with drag and drop for easy creation of adapters.

This article shares a few first impressions of SSI.

Step 1: provision an SSI instance

From the Cloud Dashboard, I have opened the Service Console for SSI. Here I have selected the option to create a new instance. Read the complete article here.

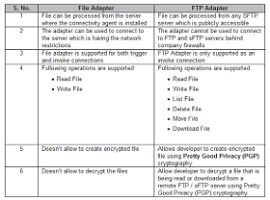

Oracle Integration Cloud offers two built-in adapters for this purpose: the File adapter and the FTP adapter. Users are often confused about when to use the File adapter, when to use the FTP adapter, and what the actual difference between them is. The common idea behind both adapters is to handle file-based processing in integrations. In this post we describe the actual difference between these two adapters. Read the complete article here.

High Availability with Oracle Integration Connectivity Agent

You want your systems to be resilient to failure, and within Integration Cloud, Oracle takes care to ensure that there is always redundancy in the cloud-based components so that your integrations continue to run despite potential hardware or software failures. However, until recently the connectivity agent was a singleton. That is no longer the case: you can now run more than one agent in an agent group.

Of Connections, Agent Groups & Agents

An agent is a software component installed on your local system that "phones home" to Integration Cloud to allow message transfer between cloud and local systems without opening any firewalls. Agents are assigned to agent groups which are logical groupings of agents. A connection may make use of an agent group to gain access to local resources.

The feature flag oic.adapter.connectivity-agent.ha allows two agents per agent group. This provides an HA solution for the agent: if one agent fails, the other continues to process messages.
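Because agents "phone home" and pull work, an agent group with two agents behaves roughly like two consumers of one work queue: whichever healthy agent polls next picks up a pending message. The Python model below illustrates only that intuition. It is a simplified assumption, not a description of how OIC actually distributes messages between agents.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    healthy: bool = True
    processed: list[str] = field(default_factory=list)


@dataclass
class AgentGroup:
    agents: list[Agent]
    pending: deque = field(default_factory=deque)

    def submit(self, message: str) -> None:
        """Queue a message for the agent group as a whole, not for one specific agent."""
        self.pending.append(message)

    def poll_round(self) -> None:
        """Each healthy agent polls in turn and takes one pending message, if any."""
        for agent in self.agents:
            if agent.healthy and self.pending:
                agent.processed.append(self.pending.popleft())
```

In this model, marking one agent unhealthy does not stop message flow; the remaining agent simply picks up everything that is pending.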

Agent Networking

Agents require HTTPS access to Integration Cloud; note that an agent may need to use a proxy to reach Integration Cloud. This access allows agents to check for messages to be delivered from the cloud to local systems or vice versa. When using multiple agents in an agent group, it is important that all agents in the group can access the same resources across the network. Failing to do so can cause messages to fail unexpectedly. Read the complete article here.

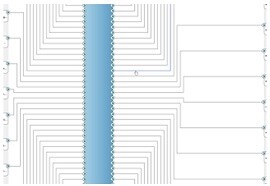

My current customer has a SOA Suite implementation dating from the 10g era. They use many queues (JMS served by AQ) to decouple services, which is in essence a good idea.

However, there are quite a lot of them. Many composites have several adapter specifications that use the same queue, but with different message selectors. Queues are also shared across composites.

There are a few composites with shiploads of .jca files. You would like to replace those with a generic adapter specification, but you might risk eating messages from other composites. This screendump is an anonymised version of one of those, and it still does not show every adapter. They're all JMS adapter specs, actually. Read the complete article here.
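The article uses ANT for this investigation. As an alternative, here is a Python sketch that scans the composites' .jca files and groups them by JMS destination and message selector, which quickly shows which adapters share a queue. It assumes the JMS adapter's DestinationName and MessageSelector settings appear as property elements with name and value attributes, as in typical JMS adapter .jca files. The directory you scan is up to you.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from pathlib import Path


def jca_properties(xml_text: str) -> dict[str, str]:
    """Collect <property name="..." value="..."/> pairs, ignoring XML namespaces."""
    root = ET.fromstring(xml_text)
    props = {}
    for element in root.iter():
        # Namespaced tags look like '{namespace}property'; compare on the local name only.
        if element.tag.rsplit("}", 1)[-1] == "property" and "name" in element.attrib:
            props[element.attrib["name"]] = element.attrib.get("value", "")
    return props


def queue_usage(jca_files: dict[str, str]) -> dict[str, list[tuple[str, str]]]:
    """Map each DestinationName to the (file, MessageSelector) pairs that use it."""
    usage: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for file_name, xml_text in jca_files.items():
        props = jca_properties(xml_text)
        if "DestinationName" in props:
            usage[props["DestinationName"]].append((file_name, props.get("MessageSelector", "")))
    return dict(usage)


def load_jca_files(root_dir: Path) -> dict[str, str]:
    """Read every *.jca file below root_dir, e.g. a checkout containing all composites."""
    return {str(path): path.read_text(encoding="utf-8") for path in root_dir.rglob("*.jca")}
```

Any destination that maps to more than one entry is a queue shared by several adapter specifications, and its selectors show how the messages are currently split between them.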

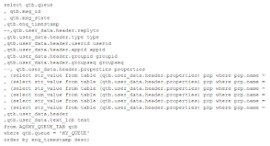

At my current customer we use queues a lot. They're JMS queues, but instead of WebLogic JMS, they're served by the Oracle database.

This is not new; in fact, the Oracle database has supported this since 8i through Advanced Queueing. Advanced Queueing is Oracle's queueing implementation based on tables and views. That means you can query the queue table to get to the content of the queue. But you might know this already.

What I find few people know is that you shouldn't query the queue table directly, but the accompanying AQ$ view instead. So, if your queue table is called MY_QUEUE_TAB, then you should query AQ$MY_QUEUE_TAB: simply prefix the table name with AQ$. Why? The AQ$ view is created automatically for you and joins the queue table with accompanying IOT tables to give you a proper and convenient representation of the state, subscriptions and other information about the messages. It is actually the supported way to query the queue tables.

A JMS queue in AQ is implemented by creating it in a queue table based on the Oracle type sys.aq$_jms_text_message.

That is in fact quite a complex type definition that implements common JMS text message based queues. There are a few other types to support other JMS message types, but let's leave those aside.

Although the payload of the queue table is a complex type, you can get to its attributes in the query using dot notation. For that, however, you must give the view a table alias (a short name) and prefix the view columns with that alias. Read the complete article here.
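Putting the two tips together, a query on the AQ$ view uses a table alias to reach into the JMS payload. The Python helper below only builds the SQL text. MY_QUEUE_TAB is the article's example name, while the selected columns are an assumption. You would run the resulting statement with your usual SQL client or a driver such as python-oracledb.

```python
import re


def aq_view_name(queue_table: str) -> str:
    """The AQ$ view Oracle creates for a queue table: prefix the table name with AQ$."""
    return f"AQ${queue_table.upper()}"


def jms_text_query(queue_table: str, alias: str = "qt") -> str:
    """Build a query on the AQ$ view that reads the JMS text payload via dot notation.

    The table alias is required: Oracle only resolves object attributes such as
    user_data.text_vc when they are qualified with a table alias.
    """
    if not re.fullmatch(r"[A-Za-z][A-Za-z0-9_$#]*", queue_table):
        raise ValueError(f"not a plain Oracle identifier: {queue_table!r}")
    return (
        f"select {alias}.msg_id, {alias}.msg_state, {alias}.enq_time, "
        f"{alias}.user_data.text_vc as payload "  # short texts; longer payloads are kept in text_lob
        f"from {aq_view_name(queue_table)} {alias} "
        f"order by {alias}.enq_time"
    )
```

Leaving out the alias (selecting user_data.text_vc from the view directly) is exactly the mistake the article warns about: Oracle will not resolve the object attribute.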

Nobody can deny that, when used correctly, RPA has the potential to provide a great ROI, especially in situations where we are trying to automate manual, non-value-added tasks, or where it serves as a mechanism to integrate with systems of information that offer no headless way to interact with them (for example, no APIs, or no adapters if you are using an integration broker tool).

I would like to start this article with a simple example. Imagine an approval business process where a Statement of Work (SOW) needs to be approved by several individuals within an organization (a consulting manager to properly staff the project, and a finance manager to make sure the project is viable). Once the approvals are done, the SOW should be uploaded and associated with an opportunity in the company's CRM application (where all customer information is centrally located). At the core of this business process, there is an orchestration that coordinates the people approvals and also integrates with the CRM application to upload the SOW to the customer opportunity. The diagram below illustrates the happy path of this orchestration, using BPMN as the modeling notation to map the business process (screenshot from Oracle Integration Cloud - Process).

Process Automation tools can easily manage the human factor of these orchestrations. Different tools manage integration to applications differently. Depending on the integrated system, the task of transacting against this system may be simple, complex and at times not possible at all. If we take a closer look at the step in which we need to upload the SOW document to the opportunity, then we have the following options:

Option a) If the CRM application has an API that allows uploading documents and linking them directly to an opportunity, then this transaction can be invoked from the orchestrating business process and automated in a headless manner. When available, this is the preferred way, as it is more scalable and does not come with the overhead of transacting via the application user interface. Read the complete article here.
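The happy path, together with the choice between option a) and an RPA robot driving the user interface, can be sketched as plain code. In this Python sketch the two approver roles come from the SOW example, while approve, upload_via_api, upload_via_rpa_bot and the has_document_api flag are hypothetical stand-ins. In practice the orchestration would be modelled in BPMN in Oracle Integration Cloud - Process rather than written by hand.

```python
from typing import Callable

# Approval steps from the SOW example: staffing check, then financial viability.
APPROVERS = ("consulting manager", "finance manager")


def run_sow_process(
    sow: str,
    opportunity_id: str,
    approve: Callable[[str, str], bool],
    has_document_api: bool,
    upload_via_api: Callable[[str, str], str],
    upload_via_rpa_bot: Callable[[str, str], str],
) -> str:
    """Collect the approvals in order, then attach the SOW to the CRM opportunity."""
    for role in APPROVERS:
        if not approve(role, sow):
            return f"rejected by {role}"
    # Option a): prefer the headless API when the CRM offers one; otherwise let a robot use the UI.
    upload = upload_via_api if has_document_api else upload_via_rpa_bot
    return upload(sow, opportunity_id)
```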

Architecture & User Experience & Innovation Section

Big day. We are announcing our brand-new product, Katana. Today is the first release, called 18.1. While working with many enterprise customers, we saw the need for a product that would help integrate machine learning into business applications in a more seamless and flexible way. The primary area for applying machine learning in the enterprise is business automation.

Katana offers and will continue to evolve in the following areas:

1. Collection of machine learning models tailored for business automation. This is the core part of Katana. The machine learning models can run in the cloud (AWS SageMaker, Google Cloud Machine Learning, Oracle Cloud, Azure) or in a Docker container deployed on-premise. The main focus is business automation with machine learning, including automation of business rules and processes. The goal is to reduce repetitive labor and to simplify the maintenance of complex, redundant business rules.

2. An API layer built to help transform business data into the format that can be passed to a machine learning model. This part provides an API to simplify machine learning model usage in customer business applications. Read the complete article here.

Learn how to develop an Internet of Things (IoT) application on Oracle IoT Cloud Service that processes your sensor data, predicts future events from the historical sensor data, and persists the analyzed results. Take the course here.

Blockchain technology is in the headlines daily. Is it a panacea for the world’s problems or just the latest tech craze? Join one of the original Bitcoin mining leaders, Emmanuel Abiodun, Software Architect at Oracle, for this informal look at Blockchain and its true value. In this informal tech talk, Mr. Abiodun will separate the reality from the hype. For details please visit the registration page here.

When you build a machine learning model, start with the data: make sure the input data is well prepared and represents the true state of what you want the model to learn. Data preparation takes time, but don't hurry; quality data is the key to machine learning success. In this post I will go through the essential steps required to bring data into the right shape to feed it into a machine learning algorithm.

The sample dataset and Python notebook for this post can be downloaded from my GitHub repo.

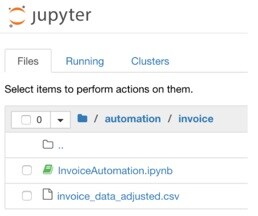

Each row in the dataset represents an invoice that was sent to a customer. The original dataset, extracted from an ERP system, comes with five columns:

- customer: customer ID

- invoice_date: date when the invoice was created

- payment_due_date: expected invoice payment date

- payment_date: actual invoice payment date

- grand_total: invoice total

Read the complete article here.
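As a small illustration of the kind of shaping these five columns invite, the Python sketch below parses the dates and derives a few numeric features per invoice, such as how many days late a payment was. It uses only the standard library. The ISO date format and the derived feature names are assumptions, and this is not the author's notebook; the actual preparation steps are in the complete article.

```python
import csv
import io
from datetime import date


def invoice_features(row: dict[str, str]) -> dict[str, float]:
    """Turn one raw invoice row into numeric features (dates assumed to be ISO yyyy-mm-dd)."""
    invoice_date = date.fromisoformat(row["invoice_date"])
    due_date = date.fromisoformat(row["payment_due_date"])
    paid_date = date.fromisoformat(row["payment_date"])
    # The customer ID is left out here; how to encode it is a separate decision.
    return {
        "grand_total": float(row["grand_total"]),
        "days_to_due": (due_date - invoice_date).days,
        "days_to_pay": (paid_date - invoice_date).days,
        "days_late": (paid_date - due_date).days,  # negative means paid early
        "paid_late": float(paid_date > due_date),
    }


def load_features(csv_text: str) -> list[dict[str, float]]:
    """Parse CSV text with the five columns described above and derive features per row."""
    return [invoice_features(row) for row in csv.DictReader(io.StringIO(csv_text))]
```

For an invoice dated 2018-01-01, due 2018-01-31 and paid 2018-02-05, this yields days_to_due = 30, days_to_pay = 35 and days_late = 5.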

Additional new content PaaS Partner Community

- APIs and Microservices at Work in the Real World: Luis Weir (Capgemini) and Wes Davies (Co-op Group) discuss microservices, API management, and the technical aspects of their work on the project that won one of this year's Oracle Cloud Platform Innovation awards.

- Authorize Access to Oracle Fusion Cloud Application APIs with OAuth Tokens

- Machine Learning: Getting the Data Into Shape

- Less is More: Improving Performance by Reducing REST Calls

- Performance of MFT Cloud Service with File Storage Service using a Hybrid Solution Architecture in Oracle Cloud Infrastructure

- Artificial Intelligence Helps CHROs Navigate The Changing World Of Work

- Emerging Tech Shines at Oracle OpenWorld: Artificial intelligence (AI), machine learning, and blockchain are incorporated into Oracle Cloud to help enterprises keep pace with change and gain a competitive edge. What more did Oracle executives say at Oracle OpenWorld about emerging tech?

- Opening Minds in Open Source Community: “Open source projects, especially large, well-known open source projects, have been slow in letting women into their teams,” says Guido van Rossum, Python’s “benevolent dictator for life.” Several years ago, he began tackling the diversity problem head-on, offering to mentor women to fix Python’s own disparity. Is it working?

- Molecular Dynamics for the Masses: Using Interactive Scientific’s simulation platform, students can understand molecules by interacting with 3D models, and researchers can investigate ways to design and deliver drug molecules. By using compute power and accessibility via Oracle Cloud, Interactive Scientific can make its technology available on smartphones, tablets, PCs, and even virtual reality headsets, says CEO Becky Sage. From zero to cloud in six months.

- Blockchain Apps Get Ever More Palatable: Blockchain is so easy to use that “now even your corner brewer” can use it, said Oracle’s Chuck Hollis at Oracle OpenWorld. Indeed, beer maker Alpha Acid Brewing now marks its bottles with blockchain-verified authenticity labels. And it isn’t the only company coming up with creative ways to use the distributed ledger software.

- Global Shipping Consortium Built on Blockchain Technology: Nine leading ocean carriers and terminal operators are forming a consortium to develop the Global Shipping Business Network, an open digital platform based on distributed ledger technology. Using blockchain technology, members of the network will be able to carry out what were previously siloed shipment data and management procedures. “This unprecedented transparency will restore trust in the industry,” says Steve Siu, CEO of CargoSmart, a member of the consortium.

For a few years now, we have created a special Christmas card for the community. In 2015 we started with a Christmas delivery process implemented in Oracle Process Cloud Service. In 2016 multiple SaaS services were integrated by drag and drop to process the Christmas presents. Last year Santa Claus handled the Christmas wish list as a dynamic case. This year you can talk to our Santa Claus Chatbot. Make sure you submit your wish list to Santa Claus and Rudolph to bring you the PaaS Forum tickets! #jkwc
