Voice Input

The Voice Input pattern supports the design of a voice or speech interface. This pattern relies on a solid understanding of the underlying user task and of how to apply Automatic Speech Recognition (ASR), Natural Language Processing (NLP), and speech output to support the user. The three levels of speech interaction are:

- ASR with text output

- ASR combined with NLP and text output

- ASR combined with NLP and spoken output

All of the above interactions leverage constantly improving ASR engines and address one of the biggest obstacles of mobile interfaces: typing on a soft keyboard is not an efficient input method. Each mobile OS has a different solution, but all take a similar approach to voice input:

- Apple Siri

- Google Voice

- Windows 10 Cortana

Appearance

Appearance characteristics for this pattern.

The voice interaction pattern has four basic states:

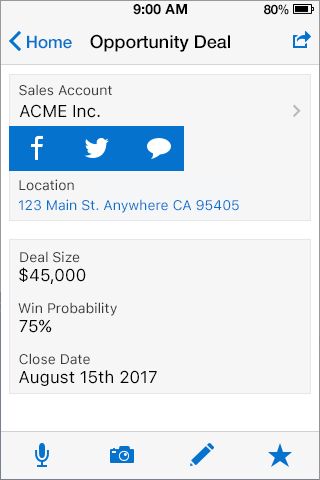

- Standby: Recording has not started yet. A record button or icon is displayed.

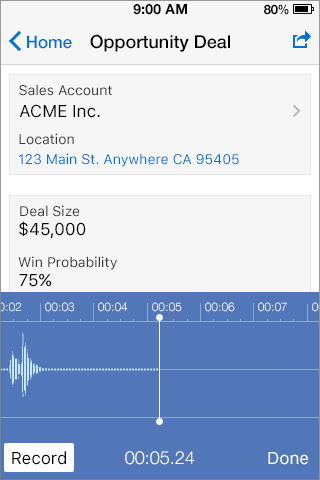

- Active: Recording has started. An icon indicates that a recording is in progress, along with a button or icon for stopping the recording. A spark chart might also display the recording.

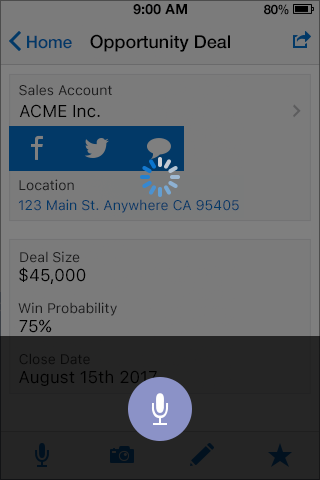

- Processing: Recording has stopped and is being saved. An activity indicator shows the processing.

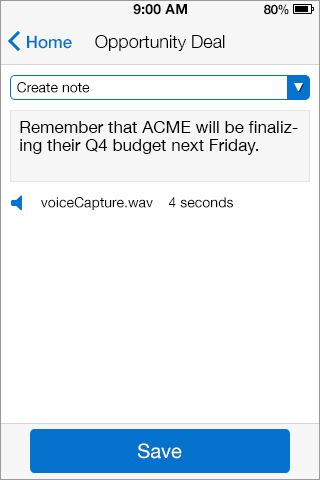

- Output: The recording is available for replay. A speaker icon and a link to the recording are usually displayed.
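
The four states above can be modeled as a small state machine. The following is a minimal sketch, not a prescribed implementation: the event names (`start`, `stop`, `done`, `reset`) are hypothetical, and the transition from Output back to Standby is an assumption that the pattern description does not state.

```python
from enum import Enum


class VoiceState(Enum):
    STANDBY = "standby"        # record button or icon is shown
    ACTIVE = "active"          # recording in progress
    PROCESSING = "processing"  # recording stopped, being saved
    OUTPUT = "output"          # recording available for replay


# Hypothetical event names. The "reset" transition is an assumption.
TRANSITIONS = {
    (VoiceState.STANDBY, "start"): VoiceState.ACTIVE,
    (VoiceState.ACTIVE, "stop"): VoiceState.PROCESSING,
    (VoiceState.PROCESSING, "done"): VoiceState.OUTPUT,
    (VoiceState.OUTPUT, "reset"): VoiceState.STANDBY,
}


def next_state(state, event):
    """Return the state after `event`; stay in place if the event does not apply."""
    return TRANSITIONS.get((state, event), state)
```

Keeping the transitions in one table makes it straightforward to tie each state to the icon or indicator it should display, and events that do not apply in the current state are simply ignored.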

Behavior

Common behaviors for this pattern.

- Activation: A tap on the microphone icon or an automated prompt activates the microphone so that it is ready to receive speech input.

- Visual Feedback: This indicates that the app is registering the speech input. The visual feedback fluctuates with sound level.

- Tap: The user can tap the microphone again to end speech detection, or a pause of 1-2 seconds can end speech input automatically.

- Sample: The user may have the ability to set the sample rate (i.e., the recording quality).

- Edit: The user may have the ability to trim the length of the recording.
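
The visual feedback and automatic stop behaviors can be sketched with a per-frame sound level. This is an illustrative sketch under stated assumptions: audio arrives as frames of samples in the range -1.0 to 1.0, and the function names, the silence threshold of 0.02, and the default pause of 1500 ms (within the 1-2 second range mentioned above) are hypothetical values chosen for the example.

```python
import math


def rms_level(samples):
    """Root-mean-square level of one frame; can drive the fluctuating visual feedback."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_stop(levels, frame_ms, silence_threshold=0.02, pause_ms=1500):
    """True once the most recent frames have stayed below the threshold for pause_ms.

    `levels` holds one RMS value per frame, oldest first. The threshold and
    pause length are assumed defaults, not values from the pattern.
    """
    needed = -(-pause_ms // frame_ms)  # ceiling division: frames covering the pause
    if len(levels) < needed:
        return False
    return all(level < silence_threshold for level in levels[-needed:])
```

For example, with 100 ms frames, `should_stop` returns `True` only after 15 consecutive quiet frames, so a short pause between words does not end the input.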

Usage

Usage guidelines for this pattern.

General voice and Natural Language Processing (NLP) guidelines:

- Voice works well when the user needs to dictate notes. However, the power of Natural Language Processing (NLP) makes it more efficient to combine steps. For instance, a user can create a note for a customer in one step: Create note for ACME saying "they are interested in closing the deal before May".

- Voice interfaces work best when there are multiple modalities to complete a task. Let the user type to make corrections or initiate a task.

- Focus on voice enabling targeted enterprise mobile tasks.

- Combine search and voice by enabling search interactions where the user simply says "find part 1234" without having to navigate to a search screen.

- Voice interfaces can support back-and-forth interactions, but make sure these assisted interactions are as short as possible.

- Give examples of what the user can say so it is clear what types of phrases the application will understand.
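
To illustrate how a single utterance can combine steps, the sketch below maps an ASR transcript to an intent with its parameters. It uses simple regular expressions as a stand-in for a real NLP component; the function name `parse_command`, the intent names, and the returned dictionary shape are all hypothetical.

```python
import re

# Patterns for the two example phrases used in the guidelines above.
NOTE_RE = re.compile(
    r'^create note for (?P<customer>.+?) saying "?(?P<text>.+?)"?$',
    re.IGNORECASE,
)
FIND_RE = re.compile(r"^find part (?P<part>\w+)$", re.IGNORECASE)


def parse_command(transcript):
    """Map a transcript to an intent; fall back to plain dictation."""
    text = transcript.strip()
    match = NOTE_RE.match(text)
    if match:
        return {
            "intent": "create_note",
            "customer": match.group("customer"),
            "text": match.group("text"),
        }
    match = FIND_RE.match(text)
    if match:
        return {"intent": "find_part", "part": match.group("part")}
    # Anything unrecognized is treated as dictated text.
    return {"intent": "dictation", "text": text}
```

Falling back to dictation keeps unrecognized input usable, and the same patterns can double as the example phrases shown to the user.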

Related

Fig 1. Voice Input - Standby

Fig 2. Voice Input - Active

Fig 3. Voice Input - Processing

Fig 4. Voice Input - Output