How to Deploy Oracle RAC 11.2.0.3 on Zone Clusters

by Vinh Tran

How to create an Oracle Solaris zone cluster, install and configure Oracle Grid Infrastructure 11.2.0.3 and Oracle Real Application Clusters 11.2.0.3 in the zone cluster, and create the Oracle Solaris Cluster resources for Oracle RAC.

Published June 2012

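Contents:

- Process Overview
- Prerequisites
- Creating the Zone Cluster Using the cfg File
- Creating the Oracle RAC Framework Resources for the Zone Cluster
- Setting Up the Root Environment in the Local Zone Cluster z11gr2A
- Creating Users and Groups for the Oracle Software
- Installing Oracle Grid Infrastructure 11.2.0.3 in the Oracle Solaris Zone Cluster Nodes
- Installing Oracle Database 11.2.0.3 and Creating the Database
- Creating the Oracle Solaris Cluster Resources
- See Also
- About the Author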

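Note: A similar article describes how to install Oracle Real Application Clusters (Oracle RAC) 11.2.0.2 in an Oracle Solaris zone cluster. This article describes the same installation for Oracle RAC 11.2.0.3 and takes into account the differences between the two Oracle RAC releases.

Introduction

Oracle Solaris Cluster 3.3 lets you create highly available zone clusters. A zone cluster consists of several Oracle Solaris zones, each residing on its own separate server; the zones that make up the cluster are linked together into a single virtual cluster. Because zone clusters are isolated from one another, each zone cluster is more secure. Because the zones are clustered, the applications they host are more available.

Installing Oracle RAC inside a zone cluster lets you run multiple instances of an Oracle database at the same time. You can therefore maintain separate database versions or separate deployments of the same database (for example, one for production and one for development). With this architecture, you can also deploy the different parts of a multitier solution into different virtual zone clusters. For example, you can deploy Oracle RAC and an application server in different zones of the same cluster. This approach isolates tiers and administrative domains from each other while still taking advantage of the simplified administration that Oracle Solaris Cluster provides.

For information about the configurations that are available when deploying Oracle RAC inside a zone cluster, see the "Running Oracle Real Application Clusters on Oracle Solaris Zone Clusters" white paper.

Note: This document is not a how-to guide for achieving the best performance, and it does not cover the following topics:

- Oracle Solaris OS installation

- Storage configuration

- Network configuration

- Oracle Solaris Cluster installation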

过程概述

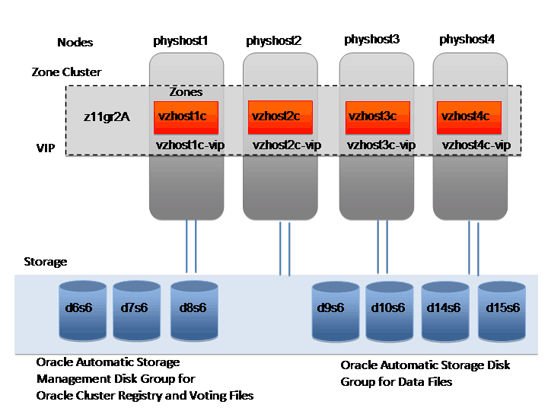

本文介绍了如何利用 Oracle 自动存储管理,在一个 Oracle Solaris Cluster 4 节点区域集群配置中安装 Oracle RAC(参见图 1)。

需要执行三个主要步骤:

- 创建一个区域集群,并在该区域集群内创建特定的 Oracle RAC 基础架构。

- 准备环境,随后安装并配置 Oracle Grid Infrastructure 和 Oracle 数据库。

- 创建 Oracle Solaris Cluster 资源,将这些资源彼此关联并使其联机。

图 1. 四节点区域集群配置

Prerequisites

Ensure that the following prerequisites are met:

- Oracle Solaris 10 9/10 (or later) and Oracle Solaris Cluster 3.3 5/11 are installed and configured.

- The Oracle Solaris 10 kernel parameters are configured in the /etc/system file in the global zone. The following is an example of a recommended value:

      shmsys:shminfo_shmmax 4294967295

- The shared disks, also known as /dev/did/rdsk devices, are known. Listing 1 shows how to identify the shared disks from the global zone of any cluster node:

      phyhost1# cldev status

      === Cluster DID Devices ===

      Device Instance        Node        Status
      ---------------        ----        ------
      /dev/did/rdsk/d1       phyhost1    Ok
      /dev/did/rdsk/d10      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d14      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d15      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d16      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d17      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d18      phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d19      phyhost2    Ok
      /dev/did/rdsk/d2       phyhost1    Ok
      /dev/did/rdsk/d20      phyhost2    Ok
      /dev/did/rdsk/d21      phyhost3    Ok
      /dev/did/rdsk/d22      phyhost3    Ok
      /dev/did/rdsk/d23      phyhost4    Ok
      /dev/did/rdsk/d24      phyhost4    Ok
      /dev/did/rdsk/d6       phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d7       phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d8       phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok
      /dev/did/rdsk/d9       phyhost1    Ok
                             phyhost2    Ok
                             phyhost3    Ok
                             phyhost4    Ok

  Listing 1. Identifying the shared disks

  The output shows that phyhost1, phyhost2, phyhost3, and phyhost4 share disks d6, d7, d8, d9, d10, d14, d15, d16, d17, and d18.

- The Oracle Automatic Storage Management disk group will use the following shared disks to store the Oracle Cluster Registry and the voting files:

      /dev/did/rdsk/d6s6
      /dev/did/rdsk/d7s6
      /dev/did/rdsk/d8s6

- The Oracle Automatic Storage Management disk group will use the following shared disks to store the data files:

      /dev/did/rdsk/d9s6
      /dev/did/rdsk/d10s6
      /dev/did/rdsk/d14s6
      /dev/did/rdsk/d15s6

  In this example, slice 6 is 102 GB. For the disk size requirements, see the Oracle Grid Infrastructure installation guide.

- The Oracle virtual IP (VIP) and Single Client Access Name (SCAN) IP requirements have been set up, for example:

  - vzhost1d, IP address 10.134.35.99, is used for the SCAN IP.
  - vzhost1c-vip, IP address 10.134.35.100, is used as the VIP for vzhost1c.
  - vzhost2c-vip, IP address 10.134.35.101, is used as the VIP for vzhost2c.
  - vzhost3c-vip, IP address 10.134.35.102, is used as the VIP for vzhost3c.
  - vzhost4c-vip, IP address 10.134.35.103, is used as the VIP for vzhost4c.

- The public network has an IPMP group with one active interface and one standby interface. The following example shows the /etc/hostname.e1000g0 and /etc/hostname.e1000g1 settings for an IPMP group named sc_ipmp0 in the global zone:

      cat /etc/hostname.e1000g0
      phyhost1 netmask + broadcast + group sc_ipmp0 up
      cat /etc/hostname.e1000g1
      group sc_ipmp0 standby up
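Picking the shared disks out of the `cldev status` output by eye gets tedious as the device list grows. The following is a minimal sketch, not part of the original procedure, that filters the output for DID devices reported `Ok` by a given number of nodes. The function name `shared_dids` is hypothetical, and the parsing assumes the column layout shown in Listing 1: a device path in the first column, followed by continuation lines that carry only a node name and a status. On Oracle Solaris 10 the default `awk` may not accept `-v`, so `nawk` or `/usr/xpg4/bin/awk` would probably be needed there.

```shell
# shared_dids N: read `cldev status` output on stdin and print the DID
# devices that are reported Ok by exactly N nodes.
shared_dids() {
    awk -v want="$1" '
        # A line that starts with a device path opens a new device entry.
        /^\/dev\/did\// {
            dev = $1
            order[++n] = dev
            count[dev] = ($3 == "Ok") ? 1 : 0
            next
        }
        # Continuation lines carry only "<node> <status>".
        dev != "" && NF == 2 && $2 == "Ok" { count[dev]++ }
        END {
            for (i = 1; i <= n; i++)
                if (count[order[i]] == want) print order[i]
        }
    '
}
```

On a cluster node, this could be used as `cldev status | shared_dids 4` to list the devices that all four physical hosts can see.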

Creating the Zone Cluster Using the cfg File

To create the Oracle Solaris zone cluster, perform the following steps:

- Create the zone.cfg file as shown in Listing 2.

      cat /var/tmp/zone.cfg
      create
      set zonepath=/export/zones/z11gR2A
      add sysid
      set name_service="NIS{domain_name=solaris.us.oracle.com}"
      set root_password=passwd
      end
      set limitpriv="default,proc_priocntl,proc_clock_highres,sys_time"
      add dedicated-cpu
      set ncpus=16
      end
      add capped-memory
      set physical=12g
      set swap=12g
      set locked=12g
      end
      add node
      set physical-host=phyhost1
      set hostname=vzhost1c
      add net
      set address=vzhost1c
      set physical=e1000g0
      end
      end
      add node
      set physical-host=phyhost2
      set hostname=vzhost2c
      add net
      set address=vzhost2c
      set physical=e1000g0
      end
      end
      add node
      set physical-host=phyhost3
      set hostname=vzhost3c
      add net
      set address=vzhost3c
      set physical=e1000g0
      end
      end
      add node
      set physical-host=phyhost4
      set hostname=vzhost4c
      add net
      set address=vzhost4c
      set physical=e1000g0
      end
      end
      add net
      set address=vzhost1d
      end
      add net
      set address=vzhost1c-vip
      end
      add net
      set address=vzhost2c-vip
      end
      add net
      set address=vzhost3c-vip
      end
      add net
      set address=vzhost4c-vip
      end
      add device
      set match="/dev/did/rdsk/d6s6"
      end
      add device
      set match="/dev/did/rdsk/d7s6"
      end
      add device
      set match="/dev/did/rdsk/d8s6"
      end
      add device
      set match="/dev/did/rdsk/d9s6"
      end
      add device
      set match="/dev/did/rdsk/d10s6"
      end
      add device
      set match="/dev/did/rdsk/d14s6"
      end
      add device
      set match="/dev/did/rdsk/d15s6"
      end
      add device
      set match="/dev/did/rdsk/d16s6"
      end
      add device
      set match="/dev/did/rdsk/d17s6"
      end
      add device
      set match="/dev/did/rdsk/d18s6"
      end

  Listing 2. Creating the cfg file

- If the SCAN host name vzhost1d resolves to multiple IP addresses, configure a separate global net resource for each of those IP addresses. For example, if the SCAN resolves to three IP addresses (10.134.35.97, 10.134.35.98, and 10.134.35.99), add the following global net resources to the zone.cfg file:

      add net
      set address=10.134.35.97
      end
      add net
      set address=10.134.35.98
      end
      add net
      set address=10.134.35.99
      end

- As root, run the commands shown in Listing 3 from one node to create the cluster:

      # clzonecluster configure -f /var/tmp/zone.cfg z11gr2A
      # clzonecluster install z11gr2A
      # clzonecluster status

      === Zone Clusters ===

      --- Zone Cluster Status ---

      Name      Node Name   Zone HostName   Status    Zone Status
      ----      ---------   -------------   ------    -----------
      z11gr2A   phyhost1    vzhost1c        Offline   Installed
                phyhost2    vzhost2c        Offline   Installed
                phyhost3    vzhost3c        Offline   Installed
                phyhost4    vzhost4c        Offline   Installed

      # clzc boot z11gr2A
      # clzc status

      === Zone Clusters ===

      --- Zone Cluster Status ---

      Name      Node Name   Zone HostName   Status    Zone Status
      ----      ---------   -------------   ------    -----------
      z11gr2A   phyhost1    vzhost1c        Online    Running
                phyhost2    vzhost2c        Online    Running
                phyhost3    vzhost3c        Online    Running
                phyhost4    vzhost4c        Online    Running

  Listing 3. Creating the Oracle Solaris zone cluster
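The node and device stanzas in the zone cluster configuration file are highly repetitive, which is where typos tend to creep in. As a sketch only, the helper functions below print those stanzas from a few parameters so that the repeated parts of `zone.cfg` can be generated rather than typed. `node_stanza` and `device_stanza` are hypothetical names, not Oracle Solaris Cluster tools. The stanza syntax is copied from the configuration file in this article, and the fixed slice number 6 matches this article's disk layout; it is an assumption for any other setup.

```shell
# node_stanza PHYSICAL_HOST ZONE_HOSTNAME PUBLIC_NIC
# Print one "add node" stanza in the form used by zone.cfg.
node_stanza() {
    printf 'add node\n'
    printf 'set physical-host=%s\n' "$1"
    printf 'set hostname=%s\n' "$2"
    printf 'add net\n'
    printf 'set address=%s\n' "$2"
    printf 'set physical=%s\n' "$3"
    printf 'end\n'
    printf 'end\n'
}

# device_stanza DID_NUMBER
# Print one "add device" stanza for slice 6 of the given DID device.
device_stanza() {
    printf 'add device\nset match="/dev/did/rdsk/d%ss6"\nend\n' "$1"
}
```

For example, `device_stanza 6` prints the three-line stanza for `/dev/did/rdsk/d6s6`, and the output of several calls can be appended to the file before `clzonecluster configure` is run.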

Creating the Oracle RAC Framework Resources for the Zone Cluster

To create the Oracle RAC framework, perform the following steps from one node:

- As root, run clsetup from one global zone cluster node, as shown in Listing 4.

      # /usr/cluster/bin/clsetup

      *** Main Menu ***

      Please select from one of the following options:

          1) Quorum
          2) Resource groups
          3) Data Services
          4) Cluster interconnect
          5) Device groups and volumes
          6) Private hostnames
          7) New nodes
          8) Other cluster tasks
          ?) Help with menu options
          q) Quit

      Option: 3

      *** Data Services Menu ***

      Please select from one of the following options:

        * 1) Apache Web Server
        * 2) Oracle
        * 3) NFS
        * 4) Oracle Real Application Clusters
        * 5) SAP Web Application Server
        * 6) Highly Available Storage
        * 7) Logical Hostname
        * 8) Shared Address
        * ?) Help
        * q) Return to the Main Menu

      Option: 4

      *** Oracle Solaris Cluster Support for Oracle RAC ***

      Oracle Solaris Cluster provides a support layer for running Oracle
      Real Application Clusters (RAC) database instances. This option allows
      you to create the RAC framework resource group, storage resources,
      database resources and administer them, for managing the Oracle
      Solaris Cluster support for Oracle RAC.

      After the RAC framework resource group has been created, you can use
      the Oracle Solaris Cluster system administration tools to administer a
      RAC framework resource group that is configured on a global cluster.
      To administer a RAC framework resource group that is configured on a
      zone cluster, instead use the appropriate Oracle Solaris Cluster
      command.

      Is it okay to continue (yes/no) [yes]?

      Please select from one of the following options:

          1) Oracle RAC Create Configuration
          2) Oracle RAC Ongoing Administration
          q) Return to the Data Services Menu

      Option: 1

      >>> Select Oracle Real Application Clusters Location <<<

      Oracle Real Application Clusters Location:

          1) Global Cluster
          2) Zone Cluster

      Option [2]: 2

      >>> Select Zone Cluster <<<

      From the list of zone clusters, select the zone cluster where you
      would like to configure Oracle Real Application Clusters.

          1) z11gr2A
          ?) Help
          d) Done

      Selected: [z11gr2A]

      >>> Select Oracle Real Application Clusters Components to Configure <<<

      Select the component of Oracle Real Application Clusters that you are
      configuring:

          1) RAC Framework Resource Group
          2) Storage Resources for Oracle Files
          3) Oracle Clusterware Framework Resource
          4) Oracle Automatic Storage Management (ASM)
          5) Resources for Oracle Real Application Clusters Database Instances

      Option [1]: 1

      >>> Verify Prerequisites <<<

      This wizard guides you through the creation and configuration of the
      Real Application Clusters (RAC) framework resource group.

      Before you use this wizard, ensure that the following prerequisites
      are met:

        * All pre-installation tasks for Oracle Real Application Clusters
          are completed.
        * The Oracle Solaris Cluster nodes are prepared.
        * The data services packages are installed.
        * All storage management software that you intend to use is
          installed and configured on all nodes where Oracle Real
          Application Clusters is to run.

      Press RETURN to continue

      >>> Select Nodes <<<

      Specify, in order of preference, a list of names of nodes where Oracle
      Real Application Clusters is to run. If you do not explicitly specify
      a list, the list defaults to all nodes in an arbitrary order.

      The following nodes are available on the zone cluster z11skgxn:

          1) vzhost1c
          2) vzhost2c
          3) vzhost3c
          4) vzhost4c
          r) Refresh and Clear All
          a) All
          ?) Help
          d) Done

      Selected: [vzhost1c, vzhost2c, vzhost3c, vzhost4c]
      Options: d

      >>> Select Clusterware Support <<<

      Select the vendor clusterware support that you would like to use.

          1) Native
          2) UDLM based

      Option [1]: 1

      >>> Review Oracle Solaris Cluster Objects <<<

      The following Oracle Solaris Cluster objects will be created.

      Select the value you are changing:

          Property Name                   Current Setting
          =============                   ===============
          1) Resource Group Name          rac-framework-rg
          2) RAC Framework Resource N...  rac-framework-rs
          d) Done
          ?) Help

      Option: d

      >>> Review Configuration of RAC Framework Resource Group <<<

      The following Oracle Solaris Cluster configuration will be created.

      To view the details for an option, select the option.

          Name                            Value
          ====                            =====
          1) Resource Group Name          rac-framework-rg
          2) RAC Framework Resource N...  rac-framework-rs
          c) Create Configuration
          ?) Help

      Option: c

  Listing 4. Running clsetup

- Verify the Oracle RAC framework resource from one global zone cluster node:

      # clrs status -Z z11gr2A

      === Cluster Resources ===

      Resource Name      Node Name   State    Status Message
      -------------      ---------   -----    --------------
      rac-framework-rs   vzhost1c    Online   Online
                         vzhost2c    Online   Online
                         vzhost3c    Online   Online
                         vzhost4c    Online   Online
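With four nodes per resource, a resource that failed to come online on one node is easy to overlook in the status table. The filter below is a rough sketch, not something taken from the Oracle Solaris Cluster tools, that prints only the resource, node, and state of entries whose state is not `Online`. The name `not_online` is hypothetical, and the parsing assumes that the first line of each resource starts in column one while the lines for its remaining nodes are indented, as in the `clrs status` output in this article.

```shell
# not_online: read `clrs status` output on stdin and print
# "<resource> <node> <state>" for every entry that is not Online.
not_online() {
    awk '
        # Skip banners, the column header, separator rules, and blank lines.
        /^===/ || /^---/ || /^Resource Name/ || NF == 0 { next }
        # An unindented line starts a new resource: name, node, state.
        /^[^ \t]/ { res = $1; node = $2; state = $3 }
        # An indented line is a further node of the current resource.
        /^[ \t]/  { node = $1; state = $2 }
        state != "Online" { print res, node, state }
    '
}
```

A possible use from a global zone cluster node would be `clrs status -Z z11gr2A | not_online`; empty output would then mean that every listed resource is online on every node.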

Setting Up the Root Environment in the Local Zone Cluster z11gr2A

Perform the following from each global zone cluster node (phyhost1, phyhost2, phyhost3, and phyhost4):

- As root, log in to the local zone node by running the following command:

      # /usr/sbin/zlogin z11gr2A
      [Connected to zone 'z11gr2A' pts/2]
      Last login: Thu Aug 25 17:30:14 on pts/2
      Oracle Corporation SunOS 5.10 Generic Patch January 2005

- (Optional) Change the root shell to bash:

      # passwd -e
      Old shell: /sbin/sh
      New shell: bash
      passwd: password information changed for root

- Include the following paths in .bash_profile:

      /u01/grid/product/11.2.0.3/bin
      /usr/cluster/bin
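One way to add the two directories to `.bash_profile` is sketched below. The helper `path_append` is a hypothetical convenience function, not something the installation requires; it only avoids adding a directory twice when the profile is sourced repeatedly. A plain `PATH=$PATH:...` assignment would work as well.

```shell
# path_append DIR: add DIR to PATH unless it is already present.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;               # already on PATH, nothing to do
        *) PATH="$PATH:$1" ;;
    esac
}

# Directories named in this article: the Grid Infrastructure binaries
# and the Oracle Solaris Cluster commands.
path_append /u01/grid/product/11.2.0.3/bin
path_append /usr/cluster/bin
export PATH
```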

Creating Users and Groups for the Oracle Software

- As root, run the following commands on each node:

      # groupadd -g 300 oinstall
      # groupadd -g 301 dba
      # useradd -g 300 -G 301 -u 302 -d /u01/ora_home -s /usr/bin/bash ouser
      # mkdir -p /u01/ora_home
      # chown ouser:oinstall /u01/ora_home
      # mkdir /u01/oracle
      # chown ouser:oinstall /u01/oracle
      # mkdir /u01/grid
      # chown ouser:oinstall /u01/grid
      # mkdir /u01/oraInventory
      # chown ouser:oinstall /u01/oraInventory

- Create a password for the software owner, ouser:

      # passwd ouser
      New Password:
      Re-enter new Password:
      passwd: password successfully changed for ouser
      bash-3.00#

- For the Oracle software owner environment, set up SSH on each node as the software owner, ouser:

      $ mkdir .ssh
      $ chmod 700 .ssh
      $ cd .ssh
      $ ssh-keygen -t rsa
      Generating public/private rsa key pair.
      Enter file in which to save the key (/u01/ora_home/.ssh/id_rsa):
      Enter passphrase (empty for no passphrase):
      Enter same passphrase again:
      Your identification has been saved in /u01/ora_home/.ssh/id_rsa.
      Your public key has been saved in /u01/ora_home/.ssh/id_rsa.pub.
      The key fingerprint is:
      e6:63:c9:71:fe:d1:8f:71:77:70:97:25:2a:ee:a9:33 local1@vzhost1c
      $
      $ pwd
      /u01/ora_home/.ssh

- From the first node, vzhost1c, run the following:

      $ cat id_rsa.pub >> authorized_keys
      $ chmod 600 authorized_keys
      $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh

- From the second node, vzhost2c, run the following:

      $ cd /u01/ora_home/.ssh
      $ cat id_rsa.pub >> authorized_keys
      $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

- From the third node, vzhost3c, run the following:

      $ cd /u01/ora_home/.ssh
      $ cat id_rsa.pub >> authorized_keys
      $ scp authorized_keys vzhost4c:/u01/ora_home/.ssh

- From the fourth node, vzhost4c, run the following:

      $ cd /u01/ora_home/.ssh
      $ cat id_rsa.pub >> authorized_keys
      $ scp authorized_keys vzhost1c:/u01/ora_home/.ssh

- From the first node, vzhost1c, which now holds the keys of all four nodes, run the following:

      $ cd /u01/ora_home/.ssh
      $ scp authorized_keys vzhost2c:/u01/ora_home/.ssh
      $ scp authorized_keys vzhost3c:/u01/ora_home/.ssh

- From each node, test the SSH setup:

      $ ssh vzhost1c date
      $ ssh vzhost2c date
      $ ssh vzhost3c date
      $ ssh vzhost4c date

- In each local zone cluster node, set up the Oracle Automatic Storage Management candidate disks as root:

      # for i in 6 7 8 9 10 14 15
      > do
      > chown ouser:oinstall /dev/did/rdsk/d${i}s6
      > chmod 660 /dev/did/rdsk/d${i}s6
      > done

- In the local zone cluster, run the following from one node as the software owner:

      $ for i in 6 7 8 9 10 14 15
      > do
      > dd if=/dev/zero of=/dev/did/rdsk/d${i}s6 bs=1024k count=200
      > done
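The SSH steps in this section pass `authorized_keys` from node to node in a ring. An alternative that can be easier to reason about, shown here only as a sketch and not as the author's method, is to collect every node's `id_rsa.pub` in one directory and merge the keys into a single file that is then copied to each node. The function name `merge_pubkeys` and the directory layout are assumptions made for illustration.

```shell
# merge_pubkeys DIR: print the unique public keys found in DIR/*.pub.
# Assumes each node's id_rsa.pub was copied into DIR under a distinct
# name, for example DIR/vzhost1c.pub.
merge_pubkeys() {
    cat "$1"/*.pub | sort -u
}
```

Under those assumptions, `merge_pubkeys /var/tmp/keys > authorized_keys` followed by `chmod 600 authorized_keys` would produce the same file content as the ring procedure, apart from line order, ready to be copied to `/u01/ora_home/.ssh` on every node.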

在 Oracle Solaris 区域集群节点中安装 Oracle Grid Infrastructure 11.2.0.3

- 以软件所有者身份,在一个 节点上执行以下操作:

$ bash $ export DISPLAY=<hostname>:<n> $ cd <PATH to 11.2.0.3-based software image>/grid/ $ ./runInstaller

- 向 Oracle Universal Installer 提供以下输入:

- 在 Select Installation Option 页上,选择 Install and Configure Oracle Grid Infrastructure for a Cluster。

- 在 Select Installation Type 页上,选择 Advanced Installation。

- 在 Select Product Languages 页上,选择相应语言。

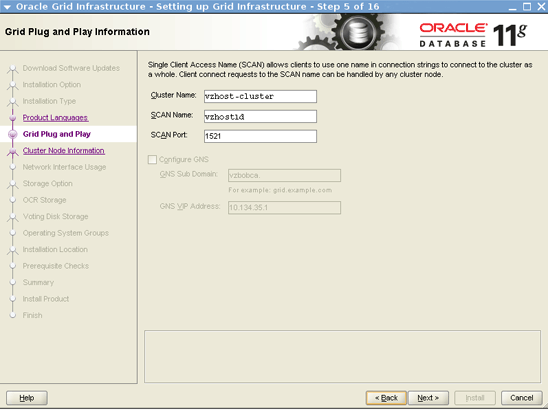

- 在 Grid Plug and Play Information 页上指定以下内容,如图 2 所示:

- 为 Cluster Name 选择 vzhost-cluster。

- 为 SCAN Name 选择 vzhost1d。

- 为 SCAN Port 选择 1521。

图 2. Oracle Grid Infrastructure Plug and Play Information 页

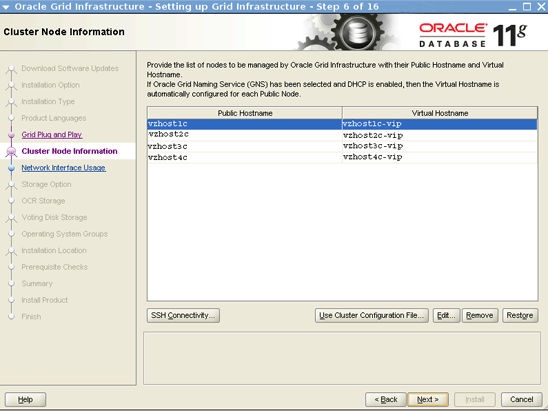

- 在 Cluster Node Information 页上指定以下内容,如图 3 所示:

Public Hostname Virtual Hostname vzhost1c vzhost1c-vip vzhost2c vzhost2c-vip vzhost3c vzhost3c-vip vzhost4c vzhost4c-vip

图 3. Cluster Node Information 页

- 在 Specify Network Interface Usage 页上,接受默认设置。

- 在 Storage Option Information 页上,选择 Oracle Automatic Storage Management 选项。

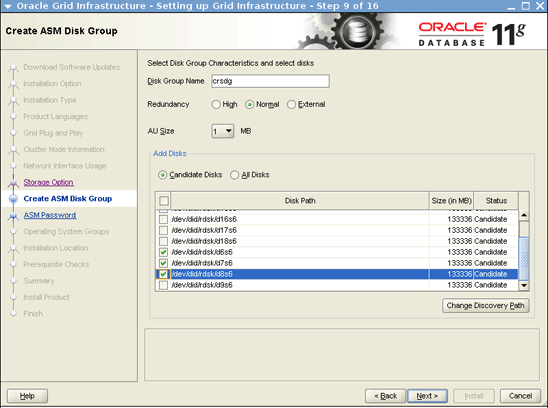

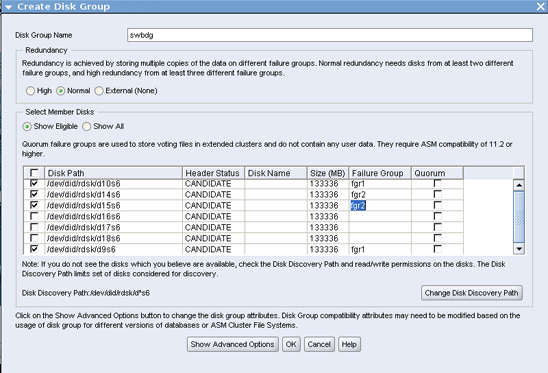

- 在 Create ASM Disk Group 页上,按照图 4 所示执行以下操作:

- 单击 Change Discovery Path。

- 在 Change Discovery Path 对话框中,将发现路径指定为 /dev/did/rdsk/d*s6。

- 将 Disk Group Name 指定为 crsdg。

- 选择 /dev/did/rdsk/d6s6、/dev/did/rdsk/d7s6 和 /dev/did/rdsk/d8s6 作为候选磁盘。

图 4. 创建 Oracle 自动存储管理磁盘组

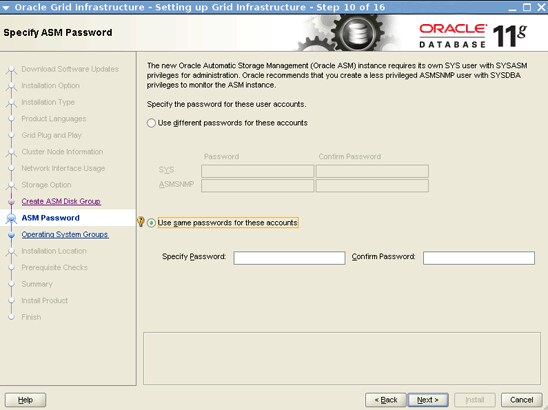

- 在 Specify ASM Password 页上,指定 SYS 和 ASMSNMP 帐户的用户名和口令,如图 5 所示。

图 5. Oracle 自动存储管理密码

- 在 Privileged Operating System Groups 页上,选择以下内容:

- 为 Oracle ASM DBA (OSDBA for ASM) 选择 oinstall。

- (可选)为 Oracle ASM Operator (OSOPER for ASM) Group (Optional) 选择一个组。

- 为 Oracle ASM Administrator (OSASM) Group 选择 oinstall。

- 在 Specify Installation Location 页上,指定以下内容:

- 为 Oracle Base 指定 /u01/oracle。

- 为 Software Location 指定 /u01/grid/product/11.2.0.3。

- 在 Create Inventory 页上,为 Inventory Directory 选择 /u01/oraInventory。

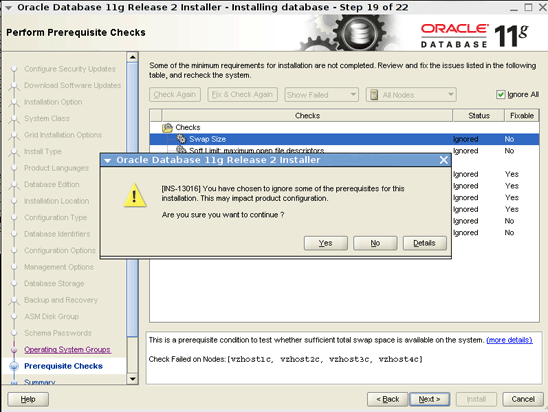

- 在 Perform Prerequisite Checks 页上,选择 Ignore All,如图 6 所示。

图 6. Perform Prerequisite Checks 页

- 在 Summary 页上,单击 Install 开始安装软件。

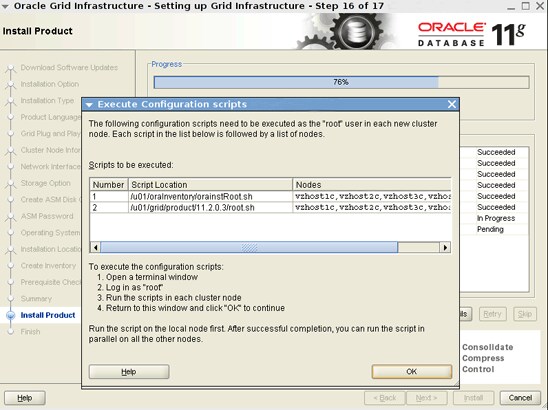

Execute Configuration Scripts 对话框要求您以

root身份执行/u01/oraInventory/orainstRoot.sh和/u01/grid/product/11.2.0.3/root.sh脚本,如图 7 所示。

图 7. Execute Configuration Scripts 对话框

- 打开一个终端窗口,并在各 节点上执行相关脚本,如清单 5 所示。

      # /u01/oraInventory/orainstRoot.sh
      Changing permissions of /u01/oraInventory.
      Adding read,write permissions for group.
      Removing read,write,execute permissions for world.
      Changing groupname of /u01/oraInventory to oinstall.
      The execution of the script is complete.
      # /u01/grid/product/11.2.0.3/root.sh
      Performing root user operation for Oracle 11g
      The following environment variables are set as:
          ORACLE_OWNER= ouser
          ORACLE_HOME=  /u01/grid/product/11.2.0.3
      Enter the full pathname of the local bin directory: [/usr/local/bin]: /opt/local/bin
      Creating /opt/local/bin directory...
         Copying dbhome to /opt/local/bin ...
         Copying oraenv to /opt/local/bin ...
         Copying coraenv to /opt/local/bin ...
      Creating /var/opt/oracle/oratab file...
      Entries will be added to the /var/opt/oracle/oratab file as needed by
      Database Configuration Assistant when a database is created
      Finished running generic part of root script.
      Now product-specific root actions will be performed.
      Using configuration parameter file: /u01/grid/product/11.2.0.3/crs/install/crsconfig_params
      Creating trace directory
      User ignored Prerequisites during installation
      OLR initialization - successful
        root wallet
        root wallet cert
        root cert export
        peer wallet
        profile reader wallet
        pa wallet
        peer wallet keys
        pa wallet keys
        peer cert request
        pa cert request
        peer cert
        pa cert
        peer root cert TP
        profile reader root cert TP
        pa root cert TP
        peer pa cert TP
        pa peer cert TP
        profile reader pa cert TP
        profile reader peer cert TP
        peer user cert
        pa user cert
      Adding Clusterware entries to inittab
      CRS-2672: Attempting to start 'ora.mdnsd' on 'vzhost1c'
      CRS-2676: Start of 'ora.mdnsd' on 'vzhost1c' succeeded
      CRS-2672: Attempting to start 'ora.gpnpd' on 'vzhost1c'
      CRS-2676: Start of 'ora.gpnpd' on 'vzhost1c' succeeded
      CRS-2672: Attempting to start 'ora.cssdmonitor' on 'vzhost1c'
      CRS-2672: Attempting to start 'ora.gipcd' on 'vzhost1c'
      CRS-2676: Start of 'ora.cssdmonitor' on 'vzhost1c' succeeded
      CRS-2676: Start of 'ora.gipcd' on 'vzhost1c' succeeded
      CRS-2672: Attempting to start 'ora.cssd' on 'vzhost1c'
      CRS-2672: Attempting to start 'ora.diskmon' on 'vzhost1c'
      CRS-2676: Start of 'ora.diskmon' on 'vzhost1c' succeeded
      CRS-2676: Start of 'ora.cssd' on 'vzhost1c' succeeded
      ASM created and started successfully.
      Disk Group crsdg created successfully.
      clscfg: -install mode specified
      Successfully accumulated necessary OCR keys.
      Creating OCR keys for user 'root', privgrp 'root'..
      Operation successful.
      CRS-4256: Updating the profile
      Successful addition of voting disk 621725b80bf24f53bfc8c56f8eaf3457.
      Successful addition of voting disk 630c40e735134f2bbf78571ea35bb856.
      Successful addition of voting disk 4a78fd6ce8564fdbbfceac0f0e9d7c37.
      Successfully replaced voting disk group with +crsdg.
      CRS-4256: Updating the profile
      CRS-4266: Voting file(s) successfully replaced
      ##  STATE    File Universal Id                File Name Disk group
      --  -----    -----------------                --------- ---------
       1. ONLINE   621725b80bf24f53bfc8c56f8eaf3457 (/dev/did/rdsk/d6s6) [CRSDG]
       2. ONLINE   630c40e735134f2bbf78571ea35bb856 (/dev/did/rdsk/d7s6) [CRSDG]
       3. ONLINE   4a78fd6ce8564fdbbfceac0f0e9d7c37 (/dev/did/rdsk/d8s6) [CRSDG]
      Located 3 voting disk(s).
      CRS-2672: Attempting to start 'ora.asm' on 'vzhost1c'
      CRS-2676: Start of 'ora.asm' on 'vzhost1c' succeeded
      CRS-2672: Attempting to start 'ora.CRSDG.dg' on 'vzhost1c'
      CRS-2676: Start of 'ora.CRSDG.dg' on 'vzhost1c' succeeded
      Configure Oracle Grid Infrastructure for a Cluster ... succeeded

  Listing 5. Running the scripts

- 脚本执行完成后,单击 GUI 中的 OK 继续操作。

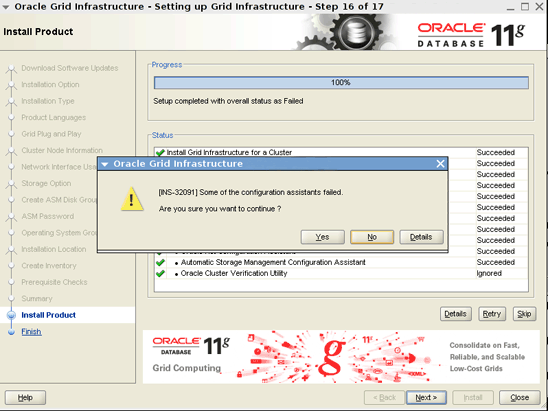

如果集群未使用 DNS 网络客户端服务,则将显示错误消息

[INS-20802] Oracle Cluster Verification Utility failed,如图 8 所示。您可以忽略此错误。oraInstall日志还显示,Cluster Verification Utility 无法解析 SCAN 名。INFO: Checking Single Client Access Name (SCAN)... INFO: Checking TCP connectivity to SCAN Listeners... INFO: TCP connectivity to SCAN Listeners exists on all cluster nodes INFO: Checking name resolution setup for "vzhost1d"... INFO: ERROR: INFO: PRVG-1101 : SCAN name "vzhost1d" failed to resolve INFO: ERROR: INFO: PRVF-4657 : Name resolution setup check for "vzhost1d" (IP address: 10.134. 35.99) failed INFO: ERROR: INFO: PRVF-4663 : Found configuration issue with the 'hosts' entry in the /etc/n sswitch.conf file INFO: Verification of SCAN VIP and Listener setup failed

图 8. 失败消息

- 如需继续,请单击 OK,随后依次单击 Skip 和 Next。

此时将显示另外一条错误消息,如图 9 所示。

图 9. 另一条错误消息

- 单击 Yes 继续。

Oracle Grid Infrastructure 11.2.0.3 的安装至此完成。

- 从任意节点检查 Oracle Grid Infrastructure 资源的状态,如清单 6 所示:

# /u01/grid/product/11.2.0.3/bin/crsctl status res -t -------------------------------------------------------------------------------- NAME TARGET STATE SERVER STATE_DETAILS -------------------------------------------------------------------------------- Local Resources -------------------------------------------------------------------------------- ora.CRSDG.dg ONLINE ONLINE vzhost1c ONLINE ONLINE vzhost2c ONLINE ONLINE vzhost3c ONLINE ONLINE vzhost4c ora.LISTENER.lsnr ONLINE ONLINE vzhost1c ONLINE ONLINE vzhost2c ONLINE ONLINE vzhost3c ONLINE ONLINE vzhost4c ora.asm ONLINE ONLINE vzhost1c Started ONLINE ONLINE vzhost2c Started ONLINE ONLINE vzhost3c Started ONLINE ONLINE vzhost4c Started ora.gsd OFFLINE OFFLINE vzhost1c OFFLINE OFFLINE vzhost2c OFFLINE OFFLINE vzhost3c OFFLINE OFFLINE vzhost4c ora.net1.network ONLINE ONLINE vzhost1c ONLINE ONLINE vzhost2c ONLINE ONLINE vzhost3c ONLINE ONLINE vzhost4c ora.ons ONLINE ONLINE vzhost1c ONLINE ONLINE vzhost2c ONLINE ONLINE vzhost3c ONLINE ONLINE vzhost4c -------------------------------------------------------------------------------- Cluster Resources -------------------------------------------------------------------------------- ora.LISTENER_SCAN1.lsnr 1 ONLINE ONLINE vzhost1c ora.cvu 1 ONLINE ONLINE vzhost2c ora.oc4j 1 ONLINE ONLINE vzhost3c ora.scan1.vip 1 ONLINE ONLINE vzhost4c ora.vzhost1c.vip 1 ONLINE ONLINE vzhost1c ora.vzhost2c.vip 1 ONLINE ONLINE vzhost2c ora.vzhost3c.vip 1 ONLINE ONLINE vzhost3c ora.vzhost4c.vip 1 ONLINE ONLINE vzhost4c清单 6. 检查资源的状态

安装 Oracle Database 11.2.0.3 并创建数据库

- 执行以下命令,启动如图 10 所示的 ASM Configuration Assistant:

$ export DISPLAY=<hostname>:<n> $ /u01/grid/product/11.2.0.3/bin/asmca

图 10. Oracle ASM Configuration Assistant

- 在 ASM Configuration Assistant 中,执行以下操作:

- 在 Disk Groups 选项卡中,单击 Create。

- 在 Create Disk Group 页(如图 11 所示)中执行以下操作,创建名为

swbdg的 Oracle 自动存储管理磁盘组,用于数据库创建:

- 将 Disk Group Name 指定为 swbdg。

- 选择 /dev/did/rdsk/d9s6、/dev/did/rdsk/d10s6、/dev/did/rdsk/d14s6 和 /dev/did/rdsk/d15s6。

- 在 Failure Group 中,将 /dev/did/rdsk/d9s6 和 /dev/did/rdsk/d10s6 指定为 fgr1。

- 在 Failure Group 中,将 /dev/did/rdsk/d14s6 和 /dev/did/rdsk/d15s6 指定为 fgr2。

- 单击 OK 创建磁盘组。

图 11. Create Disk Group 页

swbdg磁盘组创建完毕后,单击 Exit 关闭 ASM Configuration Assistant。

- 从一个 节点运行以下命令,告知 Oracle Universal Installer 安装 Oracle 数据库:

$ export DISPLAY=<hostname>:<n> $ cd <PATH to 11.2.0.3 based software image>/database $ ./runInstaller

- 向 Oracle Universal Installer 提供以下输入:

- 在 Configure Security Updates 页和 Download Software Updates 页上提供所需信息。

- 在 Select Installation Option 页上,选择 Create and configure a database。

- 在 System Class 页上,选择 Server Class。

- 在 Grid Installation Options 页上执行以下操作,如图 12 所示:

- 选择 Oracle Real Application Clusters database installation。

- 确保选中所有节点。

图 12. Oracle Grid Infrastructure 安装选项

- 在 Select Install Type 页上,选择 Advanced install。

- 在 Select Product Languages 页上,选择默认值。

- 在 Select Database Edition 页上,选择 Enterprise Edition。

- 在 Specify Installation Location 页上,指定以下内容:

- 为 Oracle Base 选择 /u01/oracle。

- 为 Software Location 选择 /u01/oracle/product/11.2.0.3。

- 在 Select Configuration Type 页上,选择 General Purpose/Transaction Processing。

- 在 Specify Database Identifiers 页上,指定以下内容:

- 将 Global Database Name 指定为 swb。

- 将 Oracle Service Identifier 指定为 swb。

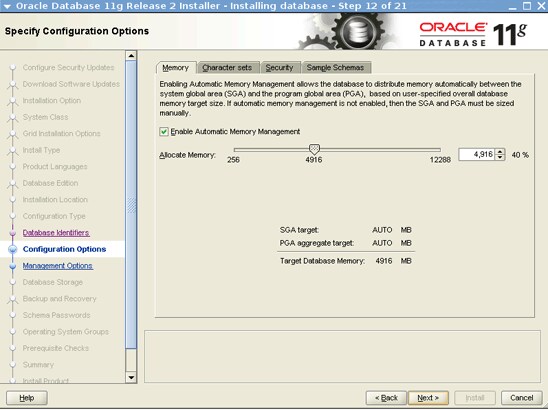

- 在如图 13 所示的 Specify Configuration Options 页中使用默认设置。

图 13. Specify Configuration Options 页

- 在 Specify Management Options 页上,使用默认设置。

- 在 Specify Database Storage Options 页上,选择 Oracle Automatic Storage Management 并为 ASMSNMP 用户指定口令。

- 在 Specify Recovery Options 页上,选择 Do not enable automated backups。

- 在 Select ASM Disk Group 页上,选择 SWBDG 作为磁盘组名称,如图 14 所示。

图 14. 选择磁盘组名称

- 在 Specify Schema Passwords 页上,为 SYS、SYSTEM、SYSMAN 和 DBSNMP 帐户指定口令。

- 在 Privileged Operating System Groups 页上,指定以下内容:

- 为 Database Administrator (OSDBA) Group 选择 dba。

- 为 Database Operator (OSOPER) Group (Optional) 选择 oinstall。

- 在 Perform Prerequisite Checks 页上,选择 Ignore All,如图 15 所示。

图 15. Perform Prerequisite Checks 页

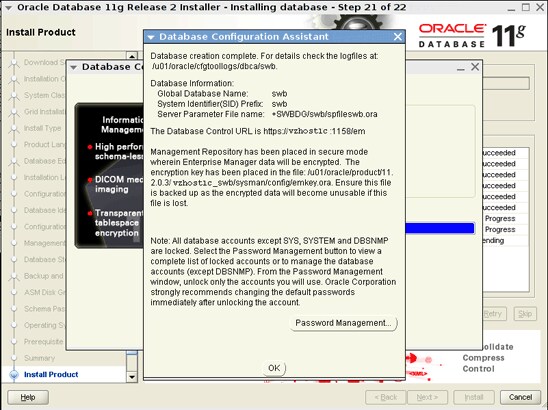

此时将显示一条 INS-13016 消息,如图 16 所示。

- 选择 Yes 继续。

图 16. 前提条件检查过程中显示的消息

- 在如图 17 所示的 Summary 页上,单击 Install。

图 17. Summary 页

- 在 Database Configuration Assistant 对话框中,单击 OK 继续,如图 18 所示。

图 18. Database Configuration Assistant 对话框

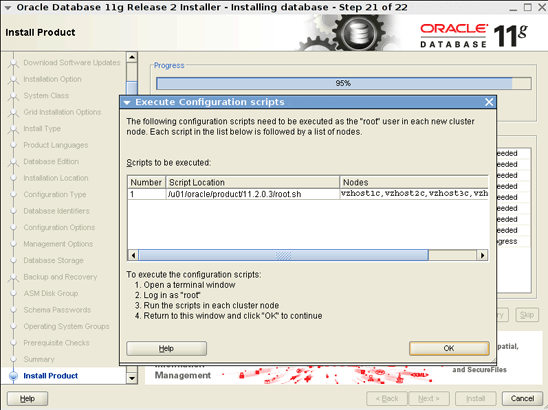

- Execute Configuration Scripts 对话框要求您在各节点上执行

root.sh,如图 19 所示。

图 19. Execute Configuration Scripts 对话框

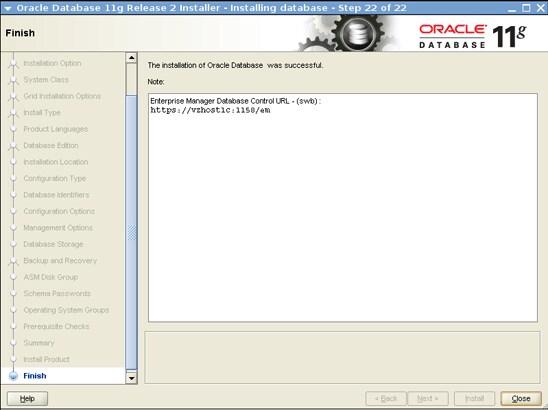

- 在最后一个节点上执行

root.sh脚本之后,单击 OK 继续。Finish 页显示,Oracle 数据库的安装和配置已完成,如图 20 所示。

图 20. Finish 页

Creating the Oracle Solaris Cluster Resources

Use the following procedure to create the Oracle Solaris Cluster resources. Alternatively, you can use the clsetup wizard.

- From one zone cluster node, run the following command to register the SUNW.crs_framework resource type in the zone cluster:

      # clrt register SUNW.crs_framework

- Add an instance of the SUNW.crs_framework resource type to the Oracle RAC framework resource group:

      # clresource create -t SUNW.crs_framework \
      -g rac-framework-rg \
      -p resource_dependencies=rac-framework-rs \
      crs-framework-rs

- Register the scalable Oracle Automatic Storage Management instance proxy resource type:

      # clresourcetype register SUNW.scalable_asm_instance_proxy

- Register the Oracle Automatic Storage Management disk group resource type:

      # clresourcetype register SUNW.scalable_asm_diskgroup_proxy

- Create the resource groups asm-inst-rg and asm-dg-rg:

      # clresourcegroup create -S asm-inst-rg asm-dg-rg

- Set a strong positive affinity on rac-framework-rg by asm-inst-rg:

      # clresourcegroup set -p rg_affinities=++rac-framework-rg asm-inst-rg

- Set a strong positive affinity on asm-inst-rg by asm-dg-rg:

      # clresourcegroup set -p rg_affinities=++asm-inst-rg asm-dg-rg

- Create a SUNW.scalable_asm_instance_proxy resource and set the resource dependencies:

      # clresource create -g asm-inst-rg \
      -t SUNW.scalable_asm_instance_proxy \
      -p ORACLE_HOME=/u01/grid/product/11.2.0.3 \
      -p CRS_HOME=/u01/grid/product/11.2.0.3 \
      -p "ORACLE_SID{vzhost1c}"=+ASM1 \
      -p "ORACLE_SID{vzhost2c}"=+ASM2 \
      -p "ORACLE_SID{vzhost3c}"=+ASM3 \
      -p "ORACLE_SID{vzhost4c}"=+ASM4 \
      -p resource_dependencies_offline_restart=crs-framework-rs \
      -d asm-inst-rs

- Add the Oracle Automatic Storage Management disk group resource type to the asm-dg-rg resource group:

      # clresource create -g asm-dg-rg -t SUNW.scalable_asm_diskgroup_proxy \
      -p asm_diskgroups=CRSDG,SWBDG \
      -p resource_dependencies_offline_restart=asm-inst-rs \
      -d asm-dg-rs

- On a cluster node, bring the asm-inst-rg resource group online in a managed state:

      # clresourcegroup online -eM asm-inst-rg

- On a cluster node, bring the asm-dg-rg resource group online in a managed state:

      # clresourcegroup online -eM asm-dg-rg

- Create a scalable resource group to contain the proxy resource for the Oracle RAC database server:

      # clresourcegroup create -S \
      -p rg_affinities=++rac-framework-rg,++asm-dg-rg \
      rac-swbdb-rg

- Register the SUNW.scalable_rac_server_proxy resource type:

      # clresourcetype register SUNW.scalable_rac_server_proxy

- Add the database resource to the resource group:

      # clresource create -g rac-swbdb-rg \
      -t SUNW.scalable_rac_server_proxy \
      -p resource_dependencies=rac-framework-rs \
      -p resource_dependencies_offline_restart=crs-framework-rs,asm-dg-rs \
      -p oracle_home=/u01/oracle/product/11.2.0.3 \
      -p crs_home=/u01/grid/product/11.2.0.3 \
      -p db_name=swb \
      -p "oracle_sid{vzhost1c}"=swb1 \
      -p "oracle_sid{vzhost2c}"=swb2 \
      -p "oracle_sid{vzhost3c}"=swb3 \
      -p "oracle_sid{vzhost4c}"=swb4 \
      -d rac-swb-srvr-proxy-rs

- Bring the resource group online:

      # clresourcegroup online -emM rac-swbdb-rg

- Check the status of the cluster resources, as shown in Listing 7.

      # clrs status

      === Cluster Resources ===

      Resource Name           Node Name   State    Status Message
      -------------           ---------   -----    --------------
      crs_framework-rs        vzhost1c    Online   Online
                              vzhost2c    Online   Online
                              vzhost3c    Online   Online
                              vzhost4c    Online   Online

      rac-framework-rs        vzhost1c    Online   Online
                              vzhost2c    Online   Online
                              vzhost3c    Online   Online
                              vzhost4c    Online   Online

      asm-inst-rs             vzhost1c    Online   Online - +ASM1 is UP and ENABLED
                              vzhost2c    Online   Online - +ASM2 is UP and ENABLED
                              vzhost3c    Online   Online - +ASM3 is UP and ENABLED
                              vzhost4c    Online   Online - +ASM4 is UP and ENABLED

      asm-dg-rs               vzhost1c    Online   Online - Mounted: SWBDG
                              vzhost2c    Online   Online - Mounted: SWBDG
                              vzhost3c    Online   Online - Mounted: SWBDG
                              vzhost4c    Online   Online - Mounted: SWBDG

      rac-swb-srvr-proxy-rs   vzhost1c    Online   Online - Oracle instance UP
                              vzhost2c    Online   Online - Oracle instance UP
                              vzhost3c    Online   Online - Oracle instance UP
                              vzhost4c    Online   Online - Oracle instance UP

  Listing 7. Checking the status of the cluster resources
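Both proxy resources in this section take one per-node SID property for each zone cluster node, numbered in node order. For a cluster with a different number of nodes, those arguments can be generated instead of typed. The function below is a sketch under that assumption; `sid_props` is a hypothetical helper name, and the property syntax it prints is copied from the `clresource create` commands in this article.

```shell
# sid_props PROPERTY PREFIX NODE...
# Print one "-p PROPERTY{NODE}=PREFIX<n>" argument per node, where <n>
# counts up from 1 in the order the nodes are given.
sid_props() {
    prop=$1
    prefix=$2
    shift 2
    i=1
    for node in "$@"; do
        # "-p" is passed as data so that printf never parses it as an option.
        printf '%s %s{%s}=%s%d\n' -p "$prop" "$node" "$prefix" "$i"
        i=$((i + 1))
    done
}
```

For instance, `sid_props ORACLE_SID +ASM vzhost1c vzhost2c` prints `-p ORACLE_SID{vzhost1c}=+ASM1` and `-p ORACLE_SID{vzhost2c}=+ASM2` on separate lines. An unquoted `$(sid_props ...)` could then stand in for the hand-written `-p` arguments on the `clresource create` command line, provided that the host names contain no whitespace.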

See Also

Here are some additional resources:

- "Running Oracle Real Application Clusters on Oracle Solaris Zone Clusters" white paper: http://www.oracle.com/technetwork/articles/servers-storage-admin/o11-062-rac-solariszonescluster-429206.pdf

- "How to Deploy Oracle RAC 11.2.0.2 on Zone Clusters": http://www.oracle.com/technetwork/articles/servers-storage-admin/rac-zone-cluster-1631291.html

- Oracle Solaris Cluster 3.3 documentation library: http://www.oracle.com/technetwork/cn/server-storage/solaris-cluster/documentation/index.html

- All Oracle Solaris Cluster technical resources: http://www.oracle.com/technetwork/server-storage/solaris-cluster/documentation/cluster-how-to-1389544.html

- Oracle Solaris Cluster 3.3 release notes: http://docs.oracle.com/cd/E18728_01/html/E22274/index.html

- Oracle Solaris patches and updates: http://www.oracle.com/technetwork/server-storage/solaris-cluster/downloads/cluster-archive-168201.html

- Oracle Solaris Cluster downloads: http://www.oracle.com/technetwork/cn/server-storage/solaris-cluster/downloads/index.html

- Oracle Solaris Cluster training: http://www.oracle.com/technetwork/cn/server-storage/solaris-cluster/training/index.html

About the Author

Vinh Tran is a quality engineer in the Oracle Solaris Cluster group. His responsibilities include, but are not limited to, certification and qualification of Oracle RAC on Oracle Solaris Cluster.

Revision 1.0, 06/26/2012
